Merge 290025958f into 454c0b2e7e

commit e44ff512fa

@ -1 +1,10 @@
-custom: https://zeronet.io/docs/help_zeronet/donate/
+github: canewsin
+patreon: # Replace with a single Patreon username e.g., user1
+open_collective: # Replace with a single Open Collective username e.g., user1
+ko_fi: canewsin
+tidelift: # Replace with a single Tidelift platform-name/package-name e.g., npm/babel
+community_bridge: # Replace with a single Community Bridge project-name e.g., cloud-foundry
+liberapay: canewsin
+issuehunt: # Replace with a single IssueHunt username e.g., user1
+otechie: # Replace with a single Otechie username e.g., user1
+custom: ['https://paypal.me/PramUkesh', 'https://zerolink.ml/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/']

@ -0,0 +1,72 @@
+# For most projects, this workflow file will not need changing; you simply need
+# to commit it to your repository.
+#
+# You may wish to alter this file to override the set of languages analyzed,
+# or to provide custom queries or build logic.
+#
+# ******** NOTE ********
+# We have attempted to detect the languages in your repository. Please check
+# the `language` matrix defined below to confirm you have the correct set of
+# supported CodeQL languages.
+#
+name: "CodeQL"
+
+on:
+  push:
+    branches: [ py3-latest ]
+  pull_request:
+    # The branches below must be a subset of the branches above
+    branches: [ py3-latest ]
+  schedule:
+    - cron: '32 19 * * 2'
+
+jobs:
+  analyze:
+    name: Analyze
+    runs-on: ubuntu-latest
+    permissions:
+      actions: read
+      contents: read
+      security-events: write
+
+    strategy:
+      fail-fast: false
+      matrix:
+        language: [ 'javascript', 'python' ]
+        # CodeQL supports [ 'cpp', 'csharp', 'go', 'java', 'javascript', 'python', 'ruby' ]
+        # Learn more about CodeQL language support at https://aka.ms/codeql-docs/language-support
+
+    steps:
+    - name: Checkout repository
+      uses: actions/checkout@v3
+
+    # Initializes the CodeQL tools for scanning.
+    - name: Initialize CodeQL
+      uses: github/codeql-action/init@v2
+      with:
+        languages: ${{ matrix.language }}
+        # If you wish to specify custom queries, you can do so here or in a config file.
+        # By default, queries listed here will override any specified in a config file.
+        # Prefix the list here with "+" to use these queries and those in the config file.
+
+        # Details on CodeQL's query packs refer to : https://docs.github.com/en/code-security/code-scanning/automatically-scanning-your-code-for-vulnerabilities-and-errors/configuring-code-scanning#using-queries-in-ql-packs
+        # queries: security-extended,security-and-quality
+
+
+    # Autobuild attempts to build any compiled languages (C/C++, C#, or Java).
+    # If this step fails, then you should remove it and run the build manually (see below)
+    - name: Autobuild
+      uses: github/codeql-action/autobuild@v2
+
+    # ℹ️ Command-line programs to run using the OS shell.
+    # 📚 See https://docs.github.com/en/actions/using-workflows/workflow-syntax-for-github-actions#jobsjob_idstepsrun
+
+    # If the Autobuild fails above, remove it and uncomment the following three lines.
+    # modify them (or add more) to build your code if your project, please refer to the EXAMPLE below for guidance.
+
+    # - run: |
+    # echo "Run, Build Application using script"
+    # ./location_of_script_within_repo/buildscript.sh
+
+    - name: Perform CodeQL Analysis
+      uses: github/codeql-action/analyze@v2
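
The CodeQL workflow above runs weekly via the cron expression `'32 19 * * 2'`: minute 32, hour 19, any day of month, any month, day-of-week 2 (Tuesday). GitHub Actions evaluates `schedule` cron expressions in UTC, so this means every Tuesday at 19:32 UTC. As a worked check, here is a minimal Python sketch that computes the next matching time for this one fixed expression; `next_codeql_run` is a hypothetical helper name, and the code is not a general cron parser.

```python
from datetime import datetime, timedelta, timezone

# Fields of the cron expression '32 19 * * 2' used by the CodeQL workflow.
CRON_MINUTE = 32
CRON_HOUR = 19
CRON_WEEKDAY = 2  # cron day-of-week: 0 = Sunday, 1 = Monday, 2 = Tuesday, ...


def next_codeql_run(now: datetime) -> datetime:
    """Return the first UTC time strictly after `now` that matches '32 19 * * 2'.

    `now` should be timezone-aware; it is converted to UTC first.
    """
    now = now.astimezone(timezone.utc)
    # datetime.weekday() counts Monday = 0 ... Sunday = 6; shift to cron numbering.
    cron_today = (now.weekday() + 1) % 7
    days_ahead = (CRON_WEEKDAY - cron_today) % 7
    candidate = (now + timedelta(days=days_ahead)).replace(
        hour=CRON_HOUR, minute=CRON_MINUTE, second=0, microsecond=0
    )
    if candidate <= now:
        # It is Tuesday but 19:32 has already passed: use next week's slot.
        candidate += timedelta(days=7)
    return candidate


# Wednesday 2023-07-12 (the 0.9.0 release date in the CHANGELOG) at 08:00 UTC.
print(next_codeql_run(datetime(2023, 7, 12, 8, 0, tzinfo=timezone.utc)))
# prints 2023-07-18 19:32:00+00:00
```

This is only the nominal trigger time; GitHub may start scheduled runs later than this during periods of high load.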

@ -4,46 +4,48 @@ on: [push, pull_request]
 
 jobs:
   test:
-
-    runs-on: ubuntu-16.04
+    runs-on: ubuntu-20.04
     strategy:
       max-parallel: 16
       matrix:
-        python-version: [3.5, 3.6, 3.7, 3.8, 3.9]
+        python-version: ["3.7", "3.8", "3.9"]
 
     steps:
-    - uses: actions/checkout@v2
+      - name: Checkout ZeroNet
+        uses: actions/checkout@v2
+        with:
+          submodules: "true"
 
-    - name: Set up Python ${{ matrix.python-version }}
-      uses: actions/setup-python@v1
-      with:
-        python-version: ${{ matrix.python-version }}
+      - name: Set up Python ${{ matrix.python-version }}
+        uses: actions/setup-python@v1
+        with:
+          python-version: ${{ matrix.python-version }}
 
-    - name: Prepare for installation
-      run: |
-        python3 -m pip install setuptools
-        python3 -m pip install --upgrade pip wheel
-        python3 -m pip install --upgrade codecov coveralls flake8 mock pytest==4.6.3 pytest-cov selenium
+      - name: Prepare for installation
+        run: |
+          python3 -m pip install setuptools
+          python3 -m pip install --upgrade pip wheel
+          python3 -m pip install --upgrade codecov coveralls flake8 mock pytest==4.6.3 pytest-cov selenium
 
-    - name: Install
-      run: |
-        python3 -m pip install --upgrade -r requirements.txt
-        python3 -m pip list
+      - name: Install
+        run: |
+          python3 -m pip install --upgrade -r requirements.txt
+          python3 -m pip list
 
-    - name: Prepare for tests
-      run: |
-        openssl version -a
-        echo 0 | sudo tee /proc/sys/net/ipv6/conf/all/disable_ipv6
+      - name: Prepare for tests
+        run: |
+          openssl version -a
+          echo 0 | sudo tee /proc/sys/net/ipv6/conf/all/disable_ipv6
 
-    - name: Test
-      run: |
-        catchsegv python3 -m pytest src/Test --cov=src --cov-config src/Test/coverage.ini
-        export ZERONET_LOG_DIR="log/CryptMessage"; catchsegv python3 -m pytest -x plugins/CryptMessage/Test
-        export ZERONET_LOG_DIR="log/Bigfile"; catchsegv python3 -m pytest -x plugins/Bigfile/Test
-        export ZERONET_LOG_DIR="log/AnnounceLocal"; catchsegv python3 -m pytest -x plugins/AnnounceLocal/Test
-        export ZERONET_LOG_DIR="log/OptionalManager"; catchsegv python3 -m pytest -x plugins/OptionalManager/Test
-        export ZERONET_LOG_DIR="log/Multiuser"; mv plugins/disabled-Multiuser plugins/Multiuser && catchsegv python -m pytest -x plugins/Multiuser/Test
-        export ZERONET_LOG_DIR="log/Bootstrapper"; mv plugins/disabled-Bootstrapper plugins/Bootstrapper && catchsegv python -m pytest -x plugins/Bootstrapper/Test
-        find src -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
-        find plugins -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
-        flake8 . --count --select=E9,F63,F72,F82 --show-source --statistics --exclude=src/lib/pyaes/
+      - name: Test
+        run: |
+          catchsegv python3 -m pytest src/Test --cov=src --cov-config src/Test/coverage.ini
+          export ZERONET_LOG_DIR="log/CryptMessage"; catchsegv python3 -m pytest -x plugins/CryptMessage/Test
+          export ZERONET_LOG_DIR="log/Bigfile"; catchsegv python3 -m pytest -x plugins/Bigfile/Test
+          export ZERONET_LOG_DIR="log/AnnounceLocal"; catchsegv python3 -m pytest -x plugins/AnnounceLocal/Test
+          export ZERONET_LOG_DIR="log/OptionalManager"; catchsegv python3 -m pytest -x plugins/OptionalManager/Test
+          export ZERONET_LOG_DIR="log/Multiuser"; mv plugins/disabled-Multiuser plugins/Multiuser && catchsegv python -m pytest -x plugins/Multiuser/Test
+          export ZERONET_LOG_DIR="log/Bootstrapper"; mv plugins/disabled-Bootstrapper plugins/Bootstrapper && catchsegv python -m pytest -x plugins/Bootstrapper/Test
+          find src -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
+          find plugins -name "*.json" | xargs -n 1 python3 -c "import json, sys; print(sys.argv[1], end=' '); json.load(open(sys.argv[1])); print('[OK]')"
+          flake8 . --count --select=E9,F63,F72,F82 --show-source --statistics --exclude=src/lib/pyaes/
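
The Test step above validates every `*.json` file under `src` and `plugins` by starting one `python3 -c` process per file through `xargs -n 1`. A minimal sketch of the same check done in a single Python process, collecting all failures into a list; `find_invalid_json` and `main` are hypothetical names, and reading the files as UTF-8 is an assumption (the one-liner opens them with the platform default encoding).

```python
import json
from pathlib import Path
from typing import List, Tuple


def find_invalid_json(root: str) -> List[Tuple[str, str]]:
    """Try to parse every *.json file under `root` (recursively).

    Returns a (path, error message) pair for each file that fails to parse.
    A missing `root` directory simply yields no files.
    """
    failures = []
    for path in sorted(Path(root).rglob("*.json")):
        try:
            with path.open(encoding="utf-8") as f:
                json.load(f)
        except ValueError as exc:  # JSONDecodeError and UnicodeDecodeError both subclass ValueError
            failures.append((str(path), str(exc)))
    return failures


def main(roots: List[str]) -> int:
    """Print each invalid file and return a shell-style exit status (0 = all valid)."""
    failures = [item for root in roots for item in find_invalid_json(root)]
    for path, error in failures:
        print(f"{path} [FAIL] {error}")
    return 1 if failures else 0
```

If the sketch were saved as a hypothetical `check_json.py` at the repository root, both `find ... | xargs` lines could be replaced by `python3 -c "import sys, check_json; sys.exit(check_json.main(['src', 'plugins']))"`; the trade-off is one interpreter start instead of one per file.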

@ -0,0 +1,3 @@
+[submodule "plugins"]
+	path = plugins
+	url = https://github.com/ZeroNetX/ZeroNet-Plugins.git

CHANGELOG.md (81 lines changed)

@ -1,6 +1,85 @@
-### ZeroNet 0.7.2 (2020-09-?) Rev4206?
+### ZeroNet 0.9.0 (2023-07-12) Rev4630
+- Fix RDos Issue in Plugins https://github.com/ZeroNetX/ZeroNet-Plugins/pull/9
+- Add trackers to Config.py for failsafety incase missing trackers.txt
+- Added Proxy links
+- Fix pysha3 dep installation issue
+- FileRequest -> Remove Unnecessary check, Fix error wording
+- Fix Response when site is missing for `actionAs`
 
 
+### ZeroNet 0.8.5 (2023-02-12) Rev4625
+- Fix(https://github.com/ZeroNetX/ZeroNet/pull/202) for SSL cert gen failed on Windows.
+- default theme-class for missing value in `users.json`.
+- Fetch Stats Plugin changes.
+
+### ZeroNet 0.8.4 (2022-12-12) Rev4620
+- Increase Minimum Site size to 25MB.
+
+### ZeroNet 0.8.3 (2022-12-11) Rev4611
+- main.py -> Fix accessing unassigned varible
+- ContentManager -> Support for multiSig
+- SiteStrorage.py -> Fix accessing unassigned varible
+- ContentManager.py Improve Logging of Valid Signers
+
+### ZeroNet 0.8.2 (2022-11-01) Rev4610
+- Fix Startup Error when plugins dir missing
+- Move trackers to seperate file & Add more trackers
+- Config:: Skip loading missing tracker files
+- Added documentation for getRandomPort fn
+
+### ZeroNet 0.8.1 (2022-10-01) Rev4600
+- fix readdress loop (cherry-pick previously added commit from conservancy)
+- Remove Patreon badge
+- Update README-ru.md (#177)
+- Include inner_path of failed request for signing in error msg and response
+- Don't Fail Silently When Cert is Not Selected
+- Console Log Updates, Specify min supported ZeroNet version for Rust version Protocol Compatibility
+- Update FUNDING.yml
+
+### ZeroNet 0.8.0 (2022-05-27) Rev4591
+- Revert File Open to catch File Access Errors.
+
+### ZeroNet 0.7.9-patch (2022-05-26) Rev4586
+- Use xescape(s) from zeronet-conservancy
+- actionUpdate response Optimisation
+- Fetch Plugins Repo Updates
+- Fix Unhandled File Access Errors
+- Create codeql-analysis.yml
+
+### ZeroNet 0.7.9 (2022-05-26) Rev4585
+- Rust Version Compatibility for update Protocol msg
+- Removed Non Working Trakers.
+- Dynamically Load Trackers from Dashboard Site.
+- Tracker Supply Improvements.
+- Fix Repo Url for Bug Report
+- First Party Tracker Update Service using Dashboard Site.
+- remove old v2 onion service [#158](https://github.com/ZeroNetX/ZeroNet/pull/158)
+
+### ZeroNet 0.7.8 (2022-03-02) Rev4580
+- Update Plugins with some bug fixes and Improvements
+
+### ZeroNet 0.7.6 (2022-01-12) Rev4565
+- Sync Plugin Updates
+- Clean up tor v3 patch [#115](https://github.com/ZeroNetX/ZeroNet/pull/115)
+- Add More Default Plugins to Repo
+- Doubled Site Publish Limits
+- Update ZeroNet Repo Urls [#103](https://github.com/ZeroNetX/ZeroNet/pull/103)
+- UI/UX: Increases Size of Notifications Close Button [#106](https://github.com/ZeroNetX/ZeroNet/pull/106)
+- Moved Plugins to Seperate Repo
+- Added `access_key` variable in Config, this used to access restrited plugins when multiuser plugin is enabled. When MultiUserPlugin is enabled we cannot access some pages like /Stats, this key will remove such restriction with access key.
+- Added `last_connection_id_current_version` to ConnectionServer, helpful to estimate no of connection from current client version.
+- Added current version: connections to /Stats page. see the previous point.
+
+### ZeroNet 0.7.5 (2021-11-28) Rev4560
+- Add more default trackers
+- Change default homepage address to `1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`
+- Change default update site address to `1Update8crprmciJHwp2WXqkx2c4iYp18`
+
+### ZeroNet 0.7.3 (2021-11-28) Rev4555
+- Fix xrange is undefined error
+- Fix Incorrect viewport on mobile while loading
+- Tor-V3 Patch by anonymoose
+
 
 ### ZeroNet 0.7.1 (2019-07-01) Rev4206
 ### Added

Dockerfile (12 lines changed)

@ -1,4 +1,4 @@
-FROM alpine:3.11
+FROM alpine:3.15
 
 #Base settings
 ENV HOME /root
@ -6,9 +6,9 @@ ENV HOME /root
 COPY requirements.txt /root/requirements.txt
 
 #Install ZeroNet
-RUN apk --update --no-cache --no-progress add python3 python3-dev gcc libffi-dev musl-dev make tor openssl \
+RUN apk --update --no-cache --no-progress add python3 python3-dev py3-pip gcc g++ autoconf automake libtool libffi-dev musl-dev make tor openssl \
  && pip3 install -r /root/requirements.txt \
- && apk del python3-dev gcc libffi-dev musl-dev make \
+ && apk del python3-dev gcc g++ autoconf automake libtool libffi-dev musl-dev make \
  && echo "ControlPort 9051" >> /etc/tor/torrc \
  && echo "CookieAuthentication 1" >> /etc/tor/torrc
 
@ -22,12 +22,12 @@ COPY . /root
 VOLUME /root/data
 
 #Control if Tor proxy is started
-ENV ENABLE_TOR false
+ENV ENABLE_TOR true
 
 WORKDIR /root
 
 #Set upstart command
-CMD (! ${ENABLE_TOR} || tor&) && python3 zeronet.py --ui_ip 0.0.0.0 --fileserver_port 26552
+CMD (! ${ENABLE_TOR} || tor&) && python3 zeronet.py --ui_ip 0.0.0.0 --fileserver_port 26117
 
 #Expose ports
-EXPOSE 43110 26552
+EXPOSE 43110 26117
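
In the Dockerfile hunk above, the shell-form `CMD` (which Docker runs through `/bin/sh -c`) relies on `${ENABLE_TOR}` expanding to the shell commands `true` or `false`: `! true` fails, so the right-hand side of `||` runs and Tor starts in the background, while `! false` succeeds and skips it. Either way the subshell exits with status 0, so `python3 zeronet.py` starts next. A small sketch that runs that exact control flow under `sh`, with `echo` standing in for `tor&` and for `zeronet.py` (the name `simulate_cmd` is hypothetical, and a POSIX `sh` on `PATH` is assumed):

```python
import os
import subprocess

# The Dockerfile CMD with `echo` standing in for `tor&` and `python3 zeronet.py ...`.
CMD = '(! ${ENABLE_TOR} || echo "starting tor") && echo "starting zeronet"'


def simulate_cmd(enable_tor: str) -> list:
    """Run CMD under sh with ENABLE_TOR set in the environment; return its output lines."""
    env = dict(os.environ, ENABLE_TOR=enable_tor)
    result = subprocess.run(
        ["sh", "-c", CMD], env=env, capture_output=True, text=True, check=True
    )
    return result.stdout.splitlines()


print(simulate_cmd("true"))   # ['starting tor', 'starting zeronet']
print(simulate_cmd("false"))  # ['starting zeronet']
```

Because the image now sets `ENV ENABLE_TOR true`, Tor starts by default, and `docker run -e ENABLE_TOR=false ...` turns it off again. Since the variable is expanded unquoted and executed as a command, values other than `true` and `false` are not meaningful here: the shell would try to run them as a program name.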

README-ru.md (250 lines changed)

@ -1,211 +1,133 @@

|

|||

# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)

|

||||

# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

|

||||

|

||||

[简体中文](./README-zh-cn.md)

|

||||

[English](./README.md)

|

||||

|

||||

Децентрализованные вебсайты использующие Bitcoin криптографию и BitTorrent сеть - https://zeronet.io

|

||||

|

||||

Децентрализованные вебсайты, использующие криптографию Bitcoin и протокол BitTorrent — https://zeronet.dev ([Зеркало в ZeroNet](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/)). В отличии от Bitcoin, ZeroNet'у не требуется блокчейн для работы, однако он использует ту же криптографию, чтобы обеспечить сохранность и проверку данных.

|

||||

|

||||

## Зачем?

|

||||

|

||||

* Мы верим в открытую, свободную, и не отцензуренную сеть и коммуникацию.

|

||||

* Нет единой точки отказа: Сайт онлайн пока по крайней мере 1 пир обслуживает его.

|

||||

* Никаких затрат на хостинг: Сайты обслуживаются посетителями.

|

||||

* Невозможно отключить: Он нигде, потому что он везде.

|

||||

* Быстр и работает оффлайн: Вы можете получить доступ к сайту, даже если Интернет недоступен.

|

||||

|

||||

- Мы верим в открытую, свободную, и неподдающуюся цензуре сеть и связь.

|

||||

- Нет единой точки отказа: Сайт остаётся онлайн, пока его обслуживает хотя бы 1 пир.

|

||||

- Нет затрат на хостинг: Сайты обслуживаются посетителями.

|

||||

- Невозможно отключить: Он нигде, потому что он везде.

|

||||

- Скорость и возможность работать без Интернета: Вы сможете получить доступ к сайту, потому что его копия хранится на вашем компьютере и у ваших пиров.

|

||||

|

||||

## Особенности

|

||||

* Обновляемые в реальном времени сайты

|

||||

* Поддержка Namecoin .bit доменов

|

||||

* Лёгок в установке: распаковал & запустил

|

||||

* Клонирование вебсайтов в один клик

|

||||

* Password-less [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

|

||||

based authorization: Ваша учетная запись защищена той же криптографией, что и ваш Bitcoin-кошелек

|

||||

* Встроенный SQL-сервер с синхронизацией данных P2P: Позволяет упростить разработку сайта и ускорить загрузку страницы

|

||||

* Анонимность: Полная поддержка сети Tor с помощью скрытых служб .onion вместо адресов IPv4

|

||||

* TLS зашифрованные связи

|

||||

* Автоматическое открытие uPnP порта

|

||||

* Плагин для поддержки многопользовательской (openproxy)

|

||||

* Работает с любыми браузерами и операционными системами

|

||||

|

||||

- Обновление сайтов в реальном времени

|

||||

- Поддержка доменов `.bit` ([Namecoin](https://www.namecoin.org))

|

||||

- Легкая установка: просто распакуйте и запустите

|

||||

- Клонирование сайтов "в один клик"

|

||||

- Беспарольная [BIP32](https://github.com/bitcoin/bips/blob/master/bip-0032.mediawiki)

|

||||

авторизация: Ваша учетная запись защищена той же криптографией, что и ваш Bitcoin-кошелек

|

||||

- Встроенный SQL-сервер с синхронизацией данных P2P: Позволяет упростить разработку сайта и ускорить загрузку страницы

|

||||

- Анонимность: Полная поддержка сети Tor, используя скрытые службы `.onion` вместо адресов IPv4

|

||||

- Зашифрованное TLS подключение

|

||||

- Автоматическое открытие UPnP–порта

|

||||

- Плагин для поддержки нескольких пользователей (openproxy)

|

||||

- Работа с любыми браузерами и операционными системами

|

||||

|

||||

## Текущие ограничения

|

||||

|

||||

- Файловые транзакции не сжаты

|

||||

- Нет приватных сайтов

|

||||

|

||||

## Как это работает?

|

||||

|

||||

* После запуска `zeronet.py` вы сможете посетить зайты (zeronet сайты) используя адрес

|

||||

`http://127.0.0.1:43110/{zeronet_address}`

|

||||

(например. `http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).

|

||||

* Когда вы посещаете новый сайт zeronet, он пытается найти пиров с помощью BitTorrent

|

||||

чтобы загрузить файлы сайтов (html, css, js ...) из них.

|

||||

* Каждый посещенный зайт также обслуживается вами. (Т.е хранится у вас на компьютере)

|

||||

* Каждый сайт содержит файл `content.json`, который содержит все остальные файлы в хэше sha512

|

||||

и подпись, созданную с использованием частного ключа сайта.

|

||||

* Если владелец сайта (у которого есть закрытый ключ для адреса сайта) изменяет сайт, то он/она

|

||||

- После запуска `zeronet.py` вы сможете посещать сайты в ZeroNet, используя адрес

|

||||

`http://127.0.0.1:43110/{zeronet_адрес}`

|

||||

(Например: `http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).

|

||||

- Когда вы посещаете новый сайт в ZeroNet, он пытается найти пиров с помощью протокола BitTorrent,

|

||||

чтобы скачать у них файлы сайта (HTML, CSS, JS и т.д.).

|

||||

- После посещения сайта вы тоже становитесь его пиром.

|

||||

- Каждый сайт содержит файл `content.json`, который содержит SHA512 хеши всех остальные файлы

|

||||

и подпись, созданную с помощью закрытого ключа сайта.

|

||||

- Если владелец сайта (тот, кто владеет закрытым ключом для адреса сайта) изменяет сайт, он

|

||||

подписывает новый `content.json` и публикует его для пиров. После этого пиры проверяют целостность `content.json`

|

||||

(используя подпись), они загружают измененные файлы и публикуют новый контент для других пиров.

|

||||

|

||||

#### [Слайд-шоу о криптографии ZeroNet, обновлениях сайтов, многопользовательских сайтах »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

|

||||

#### [Часто задаваемые вопросы »](https://zeronet.io/docs/faq/)

|

||||

|

||||

#### [Документация разработчика ZeroNet »](https://zeronet.io/docs/site_development/getting_started/)

|

||||

(используя подпись), скачвают изменённые файлы и распространяют новый контент для других пиров.

|

||||

|

||||

[Презентация о криптографии ZeroNet, обновлениях сайтов, многопользовательских сайтах »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)

|

||||

[Часто задаваемые вопросы »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

|

||||

[Документация разработчика ZeroNet »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

|

||||

|

||||

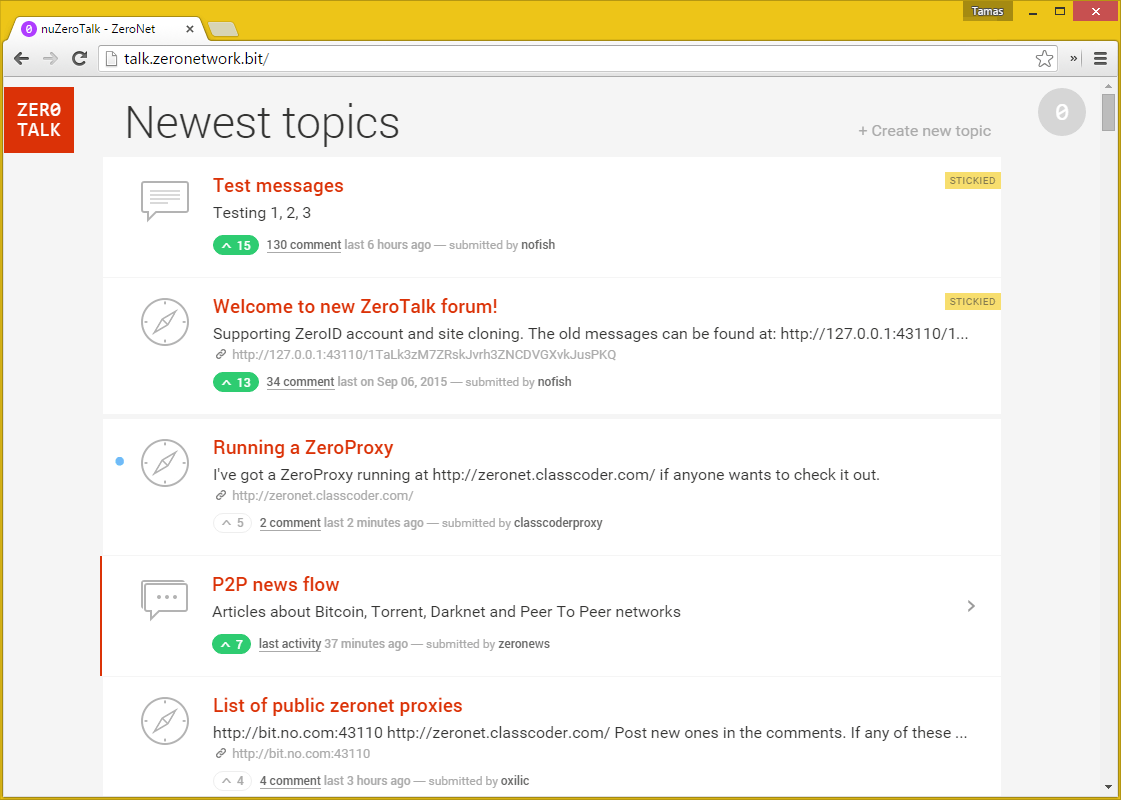

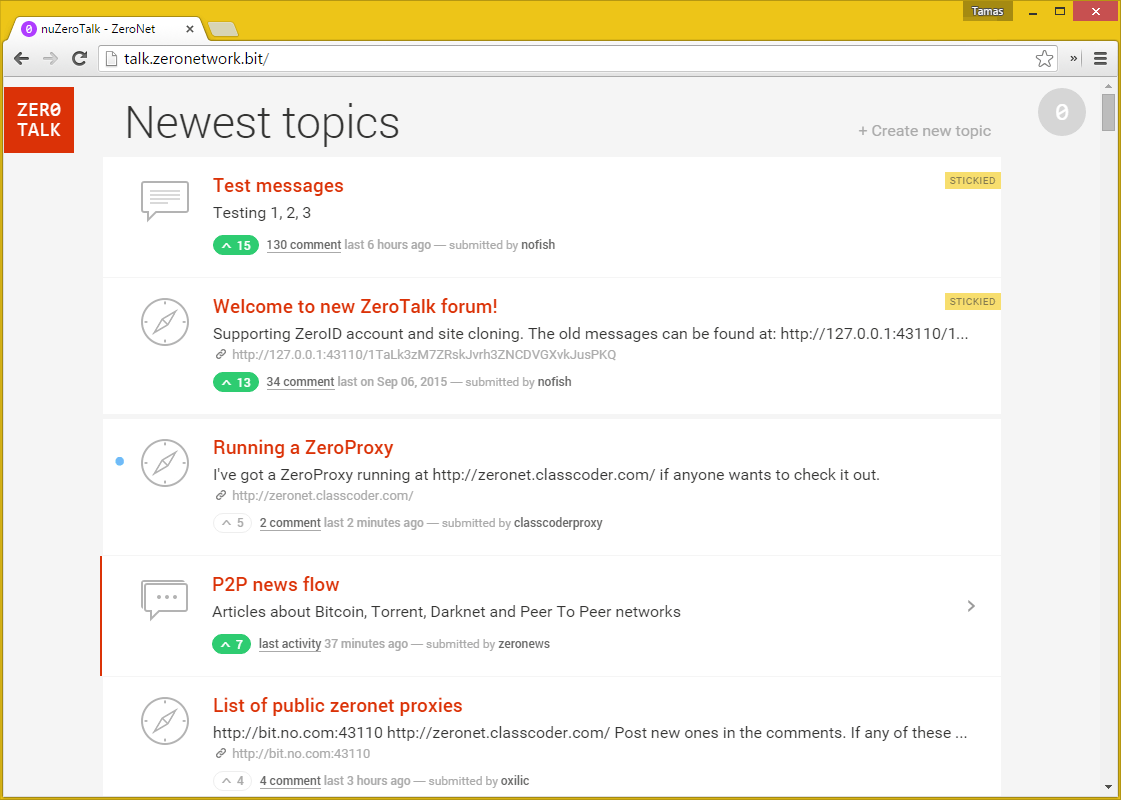

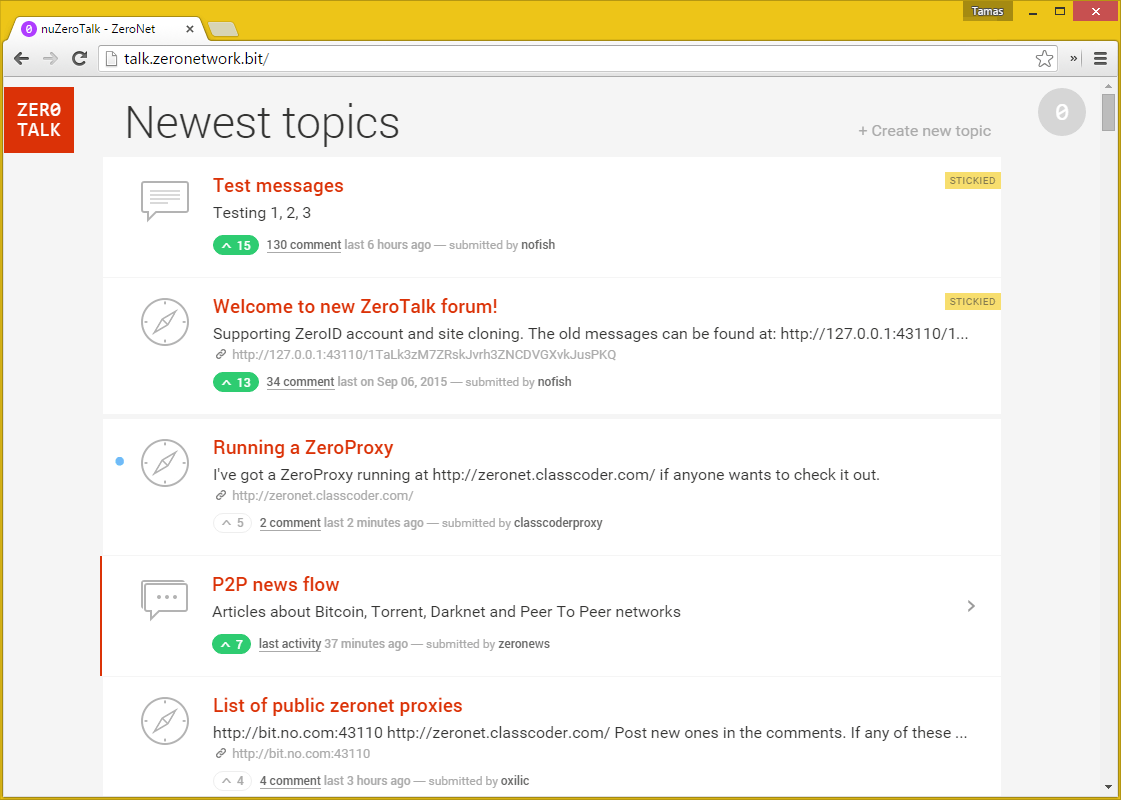

## Скриншоты

|

||||

|

||||

|

||||

|

||||

[Больше скриншотов в документации ZeroNet »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)

|

||||

|

||||

#### [Больше скриншотов в ZeroNet документации »](https://zeronet.io/docs/using_zeronet/sample_sites/)

|

||||

## Как присоединиться?

|

||||

|

||||

### Windows

|

||||

|

||||

## Как вступить

|

||||

- Скачайте и распакуйте архив [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26МБ)

|

||||

- Запустите `ZeroNet.exe`

|

||||

|

||||

* Скачайте ZeroBundle пакет:

|

||||

* [Microsoft Windows](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist/ZeroNet-win.zip)

|

||||

* [Apple macOS](https://github.com/HelloZeroNet/ZeroNet-mac/archive/dist/ZeroNet-mac.zip)

|

||||

* [Linux 64-bit](https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux64.tar.gz)

|

||||

* [Linux 32-bit](https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux32.tar.gz)

|

||||

* Распакуйте где угодно

|

||||

* Запустите `ZeroNet.exe` (win), `ZeroNet(.app)` (osx), `ZeroNet.sh` (linux)

|

||||

### macOS

|

||||

|

||||

### Linux терминал

|

||||

- Скачайте и распакуйте архив [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14МБ)

|

||||

- Запустите `ZeroNet.app`

|

||||

|

||||

* `wget https://github.com/HelloZeroNet/ZeroBundle/raw/master/dist/ZeroBundle-linux64.tar.gz`

|

||||

* `tar xvpfz ZeroBundle-linux64.tar.gz`

|

||||

* `cd ZeroBundle`

|

||||

* Запустите с помощью `./ZeroNet.sh`

|

||||

### Linux (64 бит)

|

||||

|

||||

Он загружает последнюю версию ZeroNet, затем запускает её автоматически.

|

||||

- Скачайте и распакуйте архив [ZeroNet-linux.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip) (14МБ)

|

||||

- Запустите `./ZeroNet.sh`

|

||||

|

||||

#### Ручная установка для Debian Linux

|

||||

> **Note**

|

||||

> Запустите таким образом: `./ZeroNet.sh --ui_ip '*' --ui_restrict ваш_ip_адрес`, чтобы разрешить удалённое подключение к веб–интерфейсу.

|

||||

|

||||

* `sudo apt-get update`

|

||||

* `sudo apt-get install msgpack-python python-gevent`

|

||||

* `wget https://github.com/HelloZeroNet/ZeroNet/archive/master.tar.gz`

|

||||

* `tar xvpfz master.tar.gz`

|

||||

* `cd ZeroNet-master`

|

||||

* Запустите с помощью `python2 zeronet.py`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

### Docker

|

||||

|

||||

### [Arch Linux](https://www.archlinux.org)

|

||||

Официальный образ находится здесь: https://hub.docker.com/r/canewsin/zeronet/

|

||||

|

||||

* `git clone https://aur.archlinux.org/zeronet.git`

|

||||

* `cd zeronet`

|

||||

* `makepkg -srci`

|

||||

* `systemctl start zeronet`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

### Android (arm, arm64, x86)

|

||||

|

||||

Смотрите [ArchWiki](https://wiki.archlinux.org)'s [ZeroNet

|

||||

article](https://wiki.archlinux.org/index.php/ZeroNet) для дальнейшей помощи.

|

||||

- Для работы требуется Android как минимум версии 5.0 Lollipop

|

||||

- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"

|

||||

alt="Download from Google Play"

|

||||

height="80">](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)

|

||||

- Скачать APK: https://github.com/canewsin/zeronet_mobile/releases

|

||||

|

||||

### [Gentoo Linux](https://www.gentoo.org)

|

||||

### Android (arm, arm64, x86) Облегчённый клиент только для просмотра (1МБ)

|

||||

|

||||

* [`layman -a raiagent`](https://github.com/leycec/raiagent)

|

||||

* `echo '>=net-vpn/zeronet-0.5.4' >> /etc/portage/package.accept_keywords`

|

||||

* *(Опционально)* Включить поддержку Tor: `echo 'net-vpn/zeronet tor' >>

|

||||

/etc/portage/package.use`

|

||||

* `emerge zeronet`

|

||||

* `rc-service zeronet start`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

- Для работы требуется Android как минимум версии 4.1 Jelly Bean

|

||||

- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"

|

||||

alt="Download from Google Play"

|

||||

height="80">](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)

|

||||

|

||||

Смотрите `/usr/share/doc/zeronet-*/README.gentoo.bz2` для дальнейшей помощи.

|

||||

### Установка из исходного кода

|

||||

|

||||

### [FreeBSD](https://www.freebsd.org/)

|

||||

|

||||

* `pkg install zeronet` or `cd /usr/ports/security/zeronet/ && make install clean`

|

||||

* `sysrc zeronet_enable="YES"`

|

||||

* `service zeronet start`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

### [Vagrant](https://www.vagrantup.com/)

|

||||

|

||||

* `vagrant up`

|

||||

* Подключитесь к VM с помощью `vagrant ssh`

|

||||

* `cd /vagrant`

|

||||

* Запустите `python2 zeronet.py --ui_ip 0.0.0.0`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

### [Docker](https://www.docker.com/)

|

||||

* `docker run -d -v <local_data_folder>:/root/data -p 15441:15441 -p 127.0.0.1:43110:43110 nofish/zeronet`

|

||||

* Это изображение Docker включает в себя прокси-сервер Tor, который по умолчанию отключён.

|

||||

Остерегайтесь что некоторые хостинг-провайдеры могут не позволить вам запускать Tor на своих серверах.

|

||||

Если вы хотите включить его,установите переменную среды `ENABLE_TOR` в` true` (по умолчанию: `false`) Например:

|

||||

|

||||

`docker run -d -e "ENABLE_TOR=true" -v <local_data_folder>:/root/data -p 15441:15441 -p 127.0.0.1:43110:43110 nofish/zeronet`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

### [Virtualenv](https://virtualenv.readthedocs.org/en/latest/)

|

||||

|

||||

* `virtualenv env`

|

||||

* `source env/bin/activate`

|

||||

* `pip install msgpack gevent`

|

||||

* `python2 zeronet.py`

|

||||

* Откройте http://127.0.0.1:43110/ в вашем браузере.

|

||||

|

||||

## Текущие ограничения

|

||||

|

||||

* ~~Нет torrent-похожего файла разделения для поддержки больших файлов~~ (поддержка больших файлов добавлена)

|

||||

* ~~Не анонимнее чем Bittorrent~~ (добавлена встроенная поддержка Tor)

|

||||

* Файловые транзакции не сжаты ~~ или незашифрованы еще ~~ (добавлено шифрование TLS)

|

||||

* Нет приватных сайтов

|

||||

|

||||

|

||||

## Как я могу создать сайт в Zeronet?

|

||||

|

||||

Завершите работу zeronet, если он запущен

|

||||

|

||||

```bash

|

||||

$ zeronet.py siteCreate

|

||||

...

|

||||

- Site private key (Приватный ключ сайта): 23DKQpzxhbVBrAtvLEc2uvk7DZweh4qL3fn3jpM3LgHDczMK2TtYUq

|

||||

- Site address (Адрес сайта): 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

|

||||

...

|

||||

- Site created! (Сайт создан)

|

||||

$ zeronet.py

|

||||

...

|

||||

```sh

|

||||

wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip

|

||||

unzip ZeroNet-src.zip

|

||||

cd ZeroNet

|

||||

sudo apt-get update

|

||||

sudo apt-get install python3-pip

|

||||

sudo python3 -m pip install -r requirements.txt

|

||||

```

|

||||

- Запустите `python3 zeronet.py`

|

||||

|

||||

Поздравляем, вы закончили! Теперь каждый может получить доступ к вашему зайту используя

|

||||

`http://localhost:43110/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2`

|

||||

Откройте приветственную страницу ZeroHello в вашем браузере по ссылке http://127.0.0.1:43110/

|

||||

|

||||

Следующие шаги: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)

|

||||

## Как мне создать сайт в ZeroNet?

|

||||

|

||||

- Кликните на **⋮** > **"Create new, empty site"** в меню на сайте [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d).

|

||||

- Вы будете **перенаправлены** на совершенно новый сайт, который может быть изменён только вами!

|

||||

- Вы можете найти и изменить контент вашего сайта в каталоге **data/[адрес_вашего_сайта]**

|

||||

- После изменений откройте ваш сайт, переключите влево кнопку "0" в правом верхнем углу, затем нажмите кнопки **sign** и **publish** внизу

|

||||

|

||||

## Как я могу модифицировать Zeronet сайт?

|

||||

|

||||

* Измените файлы расположенные в data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2 директории.

|

||||

Когда закончите с изменением:

|

||||

|

||||

```bash

|

||||

$ zeronet.py siteSign 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

|

||||

- Signing site (Подпись сайта): 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2...

|

||||

Private key (Приватный ключ) (input hidden):

|

||||

```

|

||||

|

||||

* Введите секретный ключ, который вы получили при создании сайта, потом:

|

||||

|

||||

```bash

|

||||

$ zeronet.py sitePublish 13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2

|

||||

...

|

||||

Site:13DNDk..bhC2 Publishing to 3/10 peers...

|

||||

Site:13DNDk..bhC2 Successfuly published to 3 peers

|

||||

- Serving files....

|

||||

```

|

||||

|

||||

* Вот и всё! Вы успешно подписали и опубликовали свои изменения.

|

||||

|

||||

Следующие шаги: [Документация разработчика ZeroNet](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

|

||||

|

||||

## Поддержите проект

|

||||

|

||||

- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX

|

||||

- Paypal: https://zeronet.io/docs/help_zeronet/donate/

|

||||

|

||||

### Спонсоры

|

||||

|

||||

* Улучшенная совместимость с MacOS / Safari стала возможной благодаря [BrowserStack.com](https://www.browserstack.com)

|

||||

- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Рекомендуем)

|

||||

- LiberaPay: https://liberapay.com/PramUkesh

|

||||

- Paypal: https://paypal.me/PramUkesh

|

||||

- Другие способы: [Donate](!https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

|

||||

|

||||

#### Спасибо!

|

||||

|

||||

* Больше информации, помощь, журнал изменений, zeronet сайты: https://www.reddit.com/r/zeronet/

|

||||

* Приходите, пообщайтесь с нами: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) или на [gitter](https://gitter.im/HelloZeroNet/ZeroNet)

|

||||

* Email: hello@zeronet.io (PGP: CB9613AE)

|

||||

- Здесь вы можете получить больше информации, помощь, прочитать список изменений и исследовать ZeroNet сайты: https://www.reddit.com/r/zeronetx/

|

||||

- Общение происходит на канале [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) или в [Gitter](https://gitter.im/canewsin/ZeroNet)

|

||||

- Электронная почта: canews.in@gmail.com

|

||||

|

|

|

|||

|

|

@@ -1,8 +1,8 @@
# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)
# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)

[English](./README.md)

Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io
Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev


## Why?

@@ -33,7 +33,7 @@

* After running `zeronet.py`, you can visit sites on ZeroNet via
`http://127.0.0.1:43110/{zeronet_address}` (for example:
`http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).
`http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).
* When you browse a ZeroNet site, the client tries to find available peers through the BitTorrent network so that it can download the files it needs (html, css, js...)
* You also store every site you have visited
* Every site contains a file named `content.json`, which stores the sha512 hashes of all other files and a signature generated with the site's private key

@@ -41,9 +41,9 @@
then, after verifying the authenticity of `content.json` with the signature, these peers download the modified files and push the new content to other peers

#### [Slideshow about ZeroNet cryptography, site updates, and multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)
#### [Frequently asked questions »](https://zeronet.io/docs/faq/)
#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)
#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)


## Screenshots

@@ -51,28 +51,28 @@

#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)
#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)


## How to join

### Windows

- Download [ZeroNet-py3-win64.zip](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist-win64/ZeroNet-py3-win64.zip) (18MB)
- Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)
- Unpack anywhere
- Run `ZeroNet.exe`

### macOS

- Download [ZeroNet-dist-mac.zip](https://github.com/HelloZeroNet/ZeroNet-dist/archive/mac/ZeroNet-dist-mac.zip) (13.2MB)
- Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)
- Unpack anywhere
- Run `ZeroNet.app`

### Linux (x86-64bit)

- `wget https://github.com/HelloZeroNet/ZeroNet-linux/archive/dist-linux64/ZeroNet-py3-linux64.tar.gz`
- `tar xvpfz ZeroNet-py3-linux64.tar.gz`
- `cd ZeroNet-linux-dist-linux64/`
- `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`
- `unzip ZeroNet-linux.zip`
- `cd ZeroNet-linux`
- Start with: `./ZeroNet.sh`
- Open http://127.0.0.1:43110/ in your browser to access the ZeroHello page


@@ -80,44 +80,53 @@

### Install from source

- `wget https://github.com/HelloZeroNet/ZeroNet/archive/py3/ZeroNet-py3.tar.gz`
- `tar xvpfz ZeroNet-py3.tar.gz`
- `cd ZeroNet-py3`
- `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`
- `unzip ZeroNet-src.zip`
- `cd ZeroNet`
- `sudo apt-get update`
- `sudo apt-get install python3-pip`
- `sudo python3 -m pip install -r requirements.txt`
- Start with: `python3 zeronet.py`
- Open http://127.0.0.1:43110/ in your browser to access the ZeroHello page

### Android (arm, arm64, x86)
- Minimum supported Android version: 21 (Android 5.0 Lollipop)
- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"
alt="Download from Google Play"
height="80">](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)
- APK download: https://github.com/canewsin/zeronet_mobile/releases

### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)
- Minimum supported Android version: 16 (JellyBean)
- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"
alt="Download from Google Play"
height="80">](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)


## Current limitations

* ~~No torrent-like file splitting for big file support~~ (big file support added)
* ~~No more anonymous than BitTorrent~~ (built-in full Tor support added)
* File transfers are not compressed ~~or encrypted~~ (TLS support added)
* File transfers are not compressed
* No private sites


## How can I create a ZeroNet site?

* Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D) site
* Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site
* You will be **redirected** to a brand-new site that only you can modify
* You can find and modify the site's content in the **data/[your site address]** directory
* After making changes, open your site, drag the "0" button in the top-right corner to the left, then press the **sign** and **publish** buttons at the bottom

Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)
Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help this project
- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)
- LiberaPay: https://liberapay.com/PramUkesh
- Paypal: https://paypal.me/PramUkesh
- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX
- Paypal: https://zeronet.io/docs/help_zeronet/donate/

### Sponsors

* [BrowserStack.com](https://www.browserstack.com) made better macOS/Safari compatibility possible

#### Thank you!

* More info, help, changelog, and zeronet sites: https://www.reddit.com/r/zeronet/
* Chat with us on [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or [gitter](https://gitter.im/HelloZeroNet/ZeroNet)
* [Here](https://gitter.im/ZeroNet-zh/Lobby) is a Chinese-language chat room on gitter
* Email: hello@zeronet.io (PGP: [960F FF2D 6C14 5AA6 13E8 491B 5B63 BAE6 CB96 13AE](https://zeronet.io/files/tamas@zeronet.io_pub.asc))
* More info, help, changelog, and zeronet sites: https://www.reddit.com/r/zeronetx/
* Chat with us on [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or [gitter](https://gitter.im/canewsin/ZeroNet)
* [Here](https://gitter.im/canewsin/ZeroNet) is a Chinese-language chat room on gitter
* Email: canews.in@gmail.com

89

README.md

@@ -1,6 +1,6 @@
# ZeroNet [](https://travis-ci.org/HelloZeroNet/ZeroNet) [](https://zeronet.io/docs/faq/) [](https://zeronet.io/docs/help_zeronet/donate/)  [](https://hub.docker.com/r/nofish/zeronet)

Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.io / [onion](http://zeronet34m3r5ngdu54uj57dcafpgdjhxsgq5kla5con4qvcmfzpvhad.onion)
# ZeroNet [](https://github.com/ZeroNetX/ZeroNet/actions/workflows/tests.yml) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/) [](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/) [](https://hub.docker.com/r/canewsin/zeronet)
<!--TODO: Update Onion Site -->
Decentralized websites using Bitcoin crypto and the BitTorrent network - https://zeronet.dev / [ZeroNet Site](http://127.0.0.1:43110/1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX/). Unlike Bitcoin, ZeroNet doesn't need a blockchain to run, but it uses the same cryptography as BTC to ensure data integrity and validation.


## Why?

@@ -33,22 +33,22 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

* After starting `zeronet.py`, you will be able to visit zeronet sites using
`http://127.0.0.1:43110/{zeronet_address}` (e.g.
`http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D`).
`http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d`).
* When you visit a new zeronet site, it tries to find peers using the BitTorrent
network so it can download the site files (html, css, js...) from them.
* Each visited site is also served by you.
* Every site contains a `content.json` file, which holds the sha512 hashes of all other files
and a signature generated using the site's private key.
* If the site owner (who has the private key for the site address) modifies the
site, then he/she signs the new `content.json` and publishes it to the peers.
site, signs the new `content.json`, and publishes it to the peers.
Afterwards, the peers verify the `content.json` integrity (using the
signature), download the modified files, and publish the new content to
other peers.

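The hashing half of `content.json` can be illustrated with a short sketch that walks a site directory and records a SHA-512 digest and size for every file except `content.json` itself. This is a simplified illustration, not ZeroNet's implementation. The function names and the `{"files": {...}}` shape are hypothetical, and ZeroNet's actual format may differ (for example, it may store a shortened digest). The signing step, which needs the site's private key, is omitted.

```python
import hashlib
import os


def sha512_file(path, blocksize=65536):
    """Return the hex SHA-512 digest of one file, reading it in fixed-size chunks."""
    digest = hashlib.sha512()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(blocksize), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_file_hashes(site_dir):
    """Map every file under site_dir (except content.json) to its SHA-512 and size.

    Hypothetical helper: the result loosely mirrors the "files" part of a
    content.json manifest, but it is not ZeroNet's exact format and has no signature.
    """
    files = {}
    for root, _dirs, names in os.walk(site_dir):
        for name in names:
            full_path = os.path.join(root, name)
            # Use forward slashes so keys look the same on every OS.
            rel_path = os.path.relpath(full_path, site_dir).replace(os.sep, "/")
            if rel_path == "content.json":
                continue  # the manifest cannot contain its own hash
            files[rel_path] = {"sha512": sha512_file(full_path), "size": os.path.getsize(full_path)}
    return {"files": files}
```

For example, `build_file_hashes("data/13DNDkMUExRf9Xa9ogwPKqp7zyHFEqbhC2")` would list the hashes a peer could compare against after downloading that site. A changed file yields a different digest, which is why the owner must re-sign `content.json` after every modification.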

#### [Slideshow about ZeroNet cryptography, site updates, multi-user sites »](https://docs.google.com/presentation/d/1_2qK1IuOKJ51pgBvllZ9Yu7Au2l551t3XBgyTSvilew/pub?start=false&loop=false&delayms=3000)
#### [Frequently asked questions »](https://zeronet.io/docs/faq/)
#### [Frequently asked questions »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/faq/)

#### [ZeroNet Developer Documentation »](https://zeronet.io/docs/site_development/getting_started/)
#### [ZeroNet Developer Documentation »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)


## Screenshots

@@ -56,48 +56,72 @@ Decentralized websites using Bitcoin crypto and the BitTorrent network - https:/

#### [More screenshots in ZeroNet docs »](https://zeronet.io/docs/using_zeronet/sample_sites/)
#### [More screenshots in ZeroNet docs »](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/using_zeronet/sample_sites/)


## How to join

### Windows

- Download [ZeroNet-py3-win64.zip](https://github.com/HelloZeroNet/ZeroNet-win/archive/dist-win64/ZeroNet-py3-win64.zip) (18MB)
- Download [ZeroNet-win.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-win.zip) (26MB)
- Unpack anywhere
- Run `ZeroNet.exe`

### macOS

- Download [ZeroNet-dist-mac.zip](https://github.com/HelloZeroNet/ZeroNet-dist/archive/mac/ZeroNet-dist-mac.zip) (13.2MB)
- Download [ZeroNet-mac.zip](https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-mac.zip) (14MB)
- Unpack anywhere
- Run `ZeroNet.app`

### Linux (x86-64bit)
- `wget https://github.com/HelloZeroNet/ZeroNet-linux/archive/dist-linux64/ZeroNet-py3-linux64.tar.gz`
- `tar xvpfz ZeroNet-py3-linux64.tar.gz`
- `cd ZeroNet-linux-dist-linux64/`
- `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-linux.zip`
- `unzip ZeroNet-linux.zip`
- `cd ZeroNet-linux`
- Start with: `./ZeroNet.sh`
- Open the ZeroHello landing page in your browser by navigating to: http://127.0.0.1:43110/

__Tip:__ Start with `./ZeroNet.sh --ui_ip '*' --ui_restrict your.ip.address` to allow remote connections on the web interface.

### Android (arm, arm64, x86)
- Minimum supported Android version: 16 (JellyBean)
- Minimum supported Android version: 21 (Android 5.0 Lollipop)
- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"
alt="Download from Google Play"
height="80">](https://play.google.com/store/apps/details?id=in.canews.zeronetmobile)
- APK download: https://github.com/canewsin/zeronet_mobile/releases
- XDA Labs: https://labs.xda-developers.com/store/app/in.canews.zeronet


### Android (arm, arm64, x86) Thin Client for Preview Only (Size 1MB)
- Minimum supported Android version: 16 (JellyBean)
- [<img src="https://play.google.com/intl/en_us/badges/images/generic/en_badge_web_generic.png"
alt="Download from Google Play"
height="80">](https://play.google.com/store/apps/details?id=dev.zeronetx.app.lite)


#### Docker
There is an official image, built from source at: https://hub.docker.com/r/nofish/zeronet/
There is an official image, built from source at: https://hub.docker.com/r/canewsin/zeronet/

### Online Proxies
Proxies are like seed boxes for sites (i.e., ZNX running on a cloud VPS), so you can try out ZeroNet through one. Add your proxy below if you have one.

#### Official ZNX Proxy:

https://proxy.zeronet.dev/

https://zeronet.dev/

#### From Community

https://0net-preview.com/

https://portal.ngnoid.tv/

https://zeronet.ipfsscan.io/


### Install from source

- `wget https://github.com/HelloZeroNet/ZeroNet/archive/py3/ZeroNet-py3.tar.gz`
- `tar xvpfz ZeroNet-py3.tar.gz`
- `cd ZeroNet-py3`
- `wget https://github.com/ZeroNetX/ZeroNet/releases/latest/download/ZeroNet-src.zip`
- `unzip ZeroNet-src.zip`
- `cd ZeroNet`
- `sudo apt-get update`
- `sudo apt-get install python3-pip`
- `sudo python3 -m pip install -r requirements.txt`

@@ -106,32 +130,27 @@ There is an official image, built from source at: https://hub.docker.com/r/nofis

## Current limitations

* ~~No torrent-like file splitting for big file support~~ (big file support added)
* ~~No more anonymous than BitTorrent~~ (built-in full Tor support added)
* File transactions are not compressed ~~or encrypted yet~~ (TLS encryption added)
* File transactions are not compressed
* No private sites


## How can I create a ZeroNet site?

* Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HeLLo4uzjaLetFx6NH3PMwFP3qbRbTf3D) site.
* Click the **⋮** > **"Create new, empty site"** menu item on the [ZeroHello](http://127.0.0.1:43110/1HELLoE3sFD9569CLCbHEAVqvqV7U2Ri9d) site.
* You will be **redirected** to a completely new site that is only modifiable by you!
* You can find and modify your site's content in the **data/[yoursiteaddress]** directory
* After making your modifications, open your site, drag the top-right "0" button to the left, then press the **sign** and **publish** buttons at the bottom

Next steps: [ZeroNet Developer Documentation](https://zeronet.io/docs/site_development/getting_started/)
Next steps: [ZeroNet Developer Documentation](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/site_development/getting_started/)

## Help keep this project alive

- Bitcoin: 1QDhxQ6PraUZa21ET5fYUCPgdrwBomnFgX
- Paypal: https://zeronet.io/docs/help_zeronet/donate/

### Sponsors

* Better macOS/Safari compatibility made possible by [BrowserStack.com](https://www.browserstack.com)
- Bitcoin: 1ZeroNetyV5mKY9JF1gsm82TuBXHpfdLX (Preferred)
- LiberaPay: https://liberapay.com/PramUkesh
- Paypal: https://paypal.me/PramUkesh
- Others: [Donate](https://docs.zeronet.dev/1DeveLopDZL1cHfKi8UXHh2UBEhzH6HhMp/help_zeronet/donate/#help-to-keep-zeronet-development-alive)

#### Thank you!

* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronet/
* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/HelloZeroNet/ZeroNet)
* Email: hello@zeronet.io (PGP: [960F FF2D 6C14 5AA6 13E8 491B 5B63 BAE6 CB96 13AE](https://zeronet.io/files/tamas@zeronet.io_pub.asc))
* More info, help, changelog, zeronet sites: https://www.reddit.com/r/zeronetx/
* Come, chat with us: [#zeronet @ FreeNode](https://kiwiirc.com/client/irc.freenode.net/zeronet) or on [gitter](https://gitter.im/canewsin/ZeroNet)
* Email: canews.in@gmail.com

@@ -0,0 +1 @@
Subproject commit 689d9309f73371f4681191b125ec3f2e14075eeb

@@ -1,148 +0,0 @@
import time
import urllib.request
import struct
import socket

import lib.bencode_open as bencode_open
from lib.subtl.subtl import UdpTrackerClient
import socks
import sockshandler
import gevent

from Plugin import PluginManager
from Config import config
from Debug import Debug
from util import helper


# We can only import plugin host clases after the plugins are loaded
@PluginManager.afterLoad
def importHostClasses():
    global Peer, AnnounceError
    from Peer import Peer
    from Site.SiteAnnouncer import AnnounceError


@PluginManager.registerTo("SiteAnnouncer")
class SiteAnnouncerPlugin(object):
    def getSupportedTrackers(self):
        trackers = super(SiteAnnouncerPlugin, self).getSupportedTrackers()
        if config.disable_udp or config.trackers_proxy != "disable":
            trackers = [tracker for tracker in trackers if not tracker.startswith("udp://")]

        return trackers

    def getTrackerHandler(self, protocol):
        if protocol == "udp":
            handler = self.announceTrackerUdp
        elif protocol == "http":
            handler = self.announceTrackerHttp
        elif protocol == "https":
            handler = self.announceTrackerHttps
        else:
            handler = super(SiteAnnouncerPlugin, self).getTrackerHandler(protocol)
        return handler

    def announceTrackerUdp(self, tracker_address, mode="start", num_want=10):
        s = time.time()
        if config.disable_udp:
            raise AnnounceError("Udp disabled by config")
        if config.trackers_proxy != "disable":
            raise AnnounceError("Udp trackers not available with proxies")

        ip, port = tracker_address.split("/")[0].split(":")
        tracker = UdpTrackerClient(ip, int(port))
        if helper.getIpType(ip) in self.getOpenedServiceTypes():
            tracker.peer_port = self.fileserver_port
        else:
            tracker.peer_port = 0
        tracker.connect()
        if not tracker.poll_once():
            raise AnnounceError("Could not connect")
        tracker.announce(info_hash=self.site.address_sha1, num_want=num_want, left=431102370)
        back = tracker.poll_once()
        if not back:
            raise AnnounceError("No response after %.0fs" % (time.time() - s))
        elif type(back) is dict and "response" in back:
            peers = back["response"]["peers"]
        else:
            raise AnnounceError("Invalid response: %r" % back)

        return peers

    def httpRequest(self, url):
        headers = {
            'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.11 (KHTML, like Gecko) Chrome/23.0.1271.64 Safari/537.11',
            'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
            'Accept-Charset': 'ISO-8859-1,utf-8;q=0.7,*;q=0.3',
            'Accept-Encoding': 'none',
            'Accept-Language': 'en-US,en;q=0.8',
            'Connection': 'keep-alive'
        }

        req = urllib.request.Request(url, headers=headers)

        if config.trackers_proxy == "tor":
            tor_manager = self.site.connection_server.tor_manager
            handler = sockshandler.SocksiPyHandler(socks.SOCKS5, tor_manager.proxy_ip, tor_manager.proxy_port)
            opener = urllib.request.build_opener(handler)
            return opener.open(req, timeout=50)
        elif config.trackers_proxy == "disable":
            return urllib.request.urlopen(req, timeout=25)
        else:
            proxy_ip, proxy_port = config.trackers_proxy.split(":")
            handler = sockshandler.SocksiPyHandler(socks.SOCKS5, proxy_ip, int(proxy_port))
            opener = urllib.request.build_opener(handler)
            return opener.open(req, timeout=50)

    def announceTrackerHttps(self, *args, **kwargs):
        kwargs["protocol"] = "https"
        return self.announceTrackerHttp(*args, **kwargs)

    def announceTrackerHttp(self, tracker_address, mode="start", num_want=10, protocol="http"):
        tracker_ip, tracker_port = tracker_address.rsplit(":", 1)
        if helper.getIpType(tracker_ip) in self.getOpenedServiceTypes():
            port = self.fileserver_port
        else:
            port = 1
        params = {
            'info_hash': self.site.address_sha1,
            'peer_id': self.peer_id, 'port': port,
            'uploaded': 0, 'downloaded': 0, 'left': 431102370, 'compact': 1, 'numwant': num_want,
            'event': 'started'
        }

        url = protocol + "://" + tracker_address + "?" + urllib.parse.urlencode(params)

        s = time.time()
        response = None
        # Load url
        if config.tor == "always" or config.trackers_proxy != "disable":
            timeout = 60
        else:
            timeout = 30

        with gevent.Timeout(timeout, False):  # Make sure of timeout
            req = self.httpRequest(url)
            response = req.read()
            req.close()
            req = None

        if not response:
            raise AnnounceError("No response after %.0fs" % (time.time() - s))

        # Decode peers
        try:
            peer_data = bencode_open.loads(response)[b"peers"]
            response = None
            peer_count = int(len(peer_data) / 6)
            peers = []
            for peer_offset in range(peer_count):
                off = 6 * peer_offset
                peer = peer_data[off:off + 6]
                addr, port = struct.unpack('!LH', peer)
                peers.append({"addr": socket.inet_ntoa(struct.pack('!L', addr)), "port": port})
        except Exception as err:
            raise AnnounceError("Invalid response: %r (%s)" % (response, Debug.formatException(err)))

        return peers
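The `announceTrackerHttp` method above does two things: it builds a tracker query string, and it decodes the tracker's compact peer list, in which each peer takes 6 bytes (a 4-byte IPv4 address followed by a 2-byte port, both big-endian, hence `'!LH'`). Below is a minimal standalone sketch of those two steps using only the standard library. The function names, the example tracker address and the `peer_id` are hypothetical, and this is not the plugin's own API. `encode_compact_peers` exists only to make the round trip testable.

```python
import socket
import struct
import urllib.parse


def build_announce_url(tracker_address, info_hash, peer_id, port, num_want=10, protocol="http"):
    """Build a tracker announce URL with the same query fields as announceTrackerHttp."""
    params = {
        "info_hash": info_hash,  # raw 20-byte SHA-1 digest; urlencode percent-escapes bytes
        "peer_id": peer_id,
        "port": port,
        "uploaded": 0, "downloaded": 0, "left": 431102370,
        "compact": 1, "numwant": num_want,
        "event": "started",
    }
    return protocol + "://" + tracker_address + "?" + urllib.parse.urlencode(params)


def decode_compact_peers(peer_data):
    """Decode a compact peer list: 6 bytes per peer, trailing partial bytes ignored."""
    peers = []
    for off in range(0, len(peer_data) - len(peer_data) % 6, 6):
        addr, port = struct.unpack("!LH", peer_data[off:off + 6])
        peers.append({"addr": socket.inet_ntoa(struct.pack("!L", addr)), "port": port})
    return peers


def encode_compact_peers(peers):
    """Inverse of decode_compact_peers (IPv4 only), useful for tests."""
    return b"".join(socket.inet_aton(p["addr"]) + struct.pack("!H", p["port"]) for p in peers)
```

The compact form keeps tracker replies small. However, it cannot carry IPv6 addresses, which need a different (18-byte) layout.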

@@ -1 +0,0 @@
from . import AnnounceBitTorrentPlugin
@@ -1,5 +0,0 @@
{
    "name": "AnnounceBitTorrent",
    "description": "Discover new peers using BitTorrent trackers.",
    "default": "enabled"
}
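In the removed `AnnounceBitTorrent` plugin above, the `trackers_proxy` setting affects two things. First, `getSupportedTrackers` drops `udp://` trackers whenever UDP is disabled or any tracker proxy is configured. Second, `httpRequest` chooses among a Tor SOCKS5 proxy, a direct connection, and an explicit `host:port` SOCKS5 proxy, with a 50 s or 25 s timeout. The sketch below restates that decision logic as plain functions so it can be checked without network access. The function names and the returned tuple shape are hypothetical and are not part of ZeroNet.

```python
def supported_trackers(trackers, disable_udp=False, trackers_proxy="disable"):
    """Mirror getSupportedTrackers: UDP trackers are skipped when UDP is off or proxied."""
    if disable_udp or trackers_proxy != "disable":
        return [tracker for tracker in trackers if not tracker.startswith("udp://")]
    return list(trackers)


def proxy_plan(trackers_proxy):
    """Mirror the branches of httpRequest as (kind, proxy_address, timeout_seconds).

    kind is "tor" (SOCKS5 through the Tor manager), "direct", or "socks5"
    (an explicit host:port taken from the setting).
    """
    if trackers_proxy == "tor":
        return ("tor", None, 50)
    if trackers_proxy == "disable":
        return ("direct", None, 25)
    proxy_ip, proxy_port = trackers_proxy.split(":")
    return ("socks5", (proxy_ip, int(proxy_port)), 50)
```

UDP trackers are filtered rather than proxied because the plugin routes tracker traffic through SOCKS5 only for HTTP(S) requests.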

@@ -1,147 +0,0 @@
import time

import gevent

from Plugin import PluginManager
from Config import config
from . import BroadcastServer


@PluginManager.registerTo("SiteAnnouncer")
class SiteAnnouncerPlugin(object):
    def announce(self, force=False, *args, **kwargs):
        local_announcer = self.site.connection_server.local_announcer

        thread = None
        if local_announcer and (force or time.time() - local_announcer.last_discover > 5 * 60):
            thread = gevent.spawn(local_announcer.discover, force=force)
        back = super(SiteAnnouncerPlugin, self).announce(force=force, *args, **kwargs)

        if thread:
            thread.join()

        return back


class LocalAnnouncer(BroadcastServer.BroadcastServer):
    def __init__(self, server, listen_port):
        super(LocalAnnouncer, self).__init__("zeronet", listen_port=listen_port)
        self.server = server

        self.sender_info["peer_id"] = self.server.peer_id
        self.sender_info["port"] = self.server.port
        self.sender_info["broadcast_port"] = listen_port
        self.sender_info["rev"] = config.rev

        self.known_peers = {}
        self.last_discover = 0

    def discover(self, force=False):
        self.log.debug("Sending discover request (force: %s)" % force)
        self.last_discover = time.time()
        if force:  # Probably new site added, clean cache
            self.known_peers = {}

        for peer_id, known_peer in list(self.known_peers.items()):
            if time.time() - known_peer["found"] > 20 * 60:
                del(self.known_peers[peer_id])
                self.log.debug("Timeout, removing from known_peers: %s" % peer_id)
        self.broadcast({"cmd": "discoverRequest", "params": {}}, port=self.listen_port)

    def actionDiscoverRequest(self, sender, params):
        back = {
            "cmd": "discoverResponse",
            "params": {
                "sites_changed": self.server.site_manager.sites_changed
            }
        }

        if sender["peer_id"] not in self.known_peers:
            self.known_peers[sender["peer_id"]] = {"added": time.time(), "sites_changed": 0, "updated": 0, "found": time.time()}
            self.log.debug("Got discover request from unknown peer %s (%s), time to refresh known peers" % (sender["ip"], sender["peer_id"]))
            gevent.spawn_later(1.0, self.discover)  # Let the response arrive first to the requester

        return back

    def actionDiscoverResponse(self, sender, params):
        if sender["peer_id"] in self.known_peers:
            self.known_peers[sender["peer_id"]]["found"] = time.time()
        if params["sites_changed"] != self.known_peers.get(sender["peer_id"], {}).get("sites_changed"):
            # Peer's site list changed, request the list of new sites
            return {"cmd": "siteListRequest"}
        else:
            # Peer's site list is the same
            for site in self.server.sites.values():
                peer = site.peers.get("%s:%s" % (sender["ip"], sender["port"]))
                if peer:
                    peer.found("local")

    def actionSiteListRequest(self, sender, params):
        back = []
        sites = list(self.server.sites.values())

        # Split adresses to group of 100 to avoid UDP size limit
        site_groups = [sites[i:i + 100] for i in range(0, len(sites), 100)]
        for site_group in site_groups:
            res = {}
            res["sites_changed"] = self.server.site_manager.sites_changed
            res["sites"] = [site.address_hash for site in site_group]
            back.append({"cmd": "siteListResponse", "params": res})
        return back

    def actionSiteListResponse(self, sender, params):
        s = time.time()
        peer_sites = set(params["sites"])
        num_found = 0
        added_sites = []
        for site in self.server.sites.values():
            if site.address_hash in peer_sites:
                added = site.addPeer(sender["ip"], sender["port"], source="local")
                num_found += 1
                if added:
                    site.worker_manager.onPeers()
                    site.updateWebsocket(peers_added=1)
                    added_sites.append(site)

        # Save sites changed value to avoid unnecessary site list download
        if sender["peer_id"] not in self.known_peers:
            self.known_peers[sender["peer_id"]] = {"added": time.time()}