url

parent a1f10af609

commit 0038cb2283

@ -1,10 +1,11 @@

# MLOps course DeepLearning.ai

> “MLOps is an ML engineering culture and practice that aims at unifying ML development (Dev) and ML operation (Ops)” [^1]

## Automation and Monitoring

at all steps of ML system construction, including:

- integration
- testing
- releasing

@ -16,6 +17,7 @@ at all steps of ML system construction, including:

|

|||

|

||||

|

||||

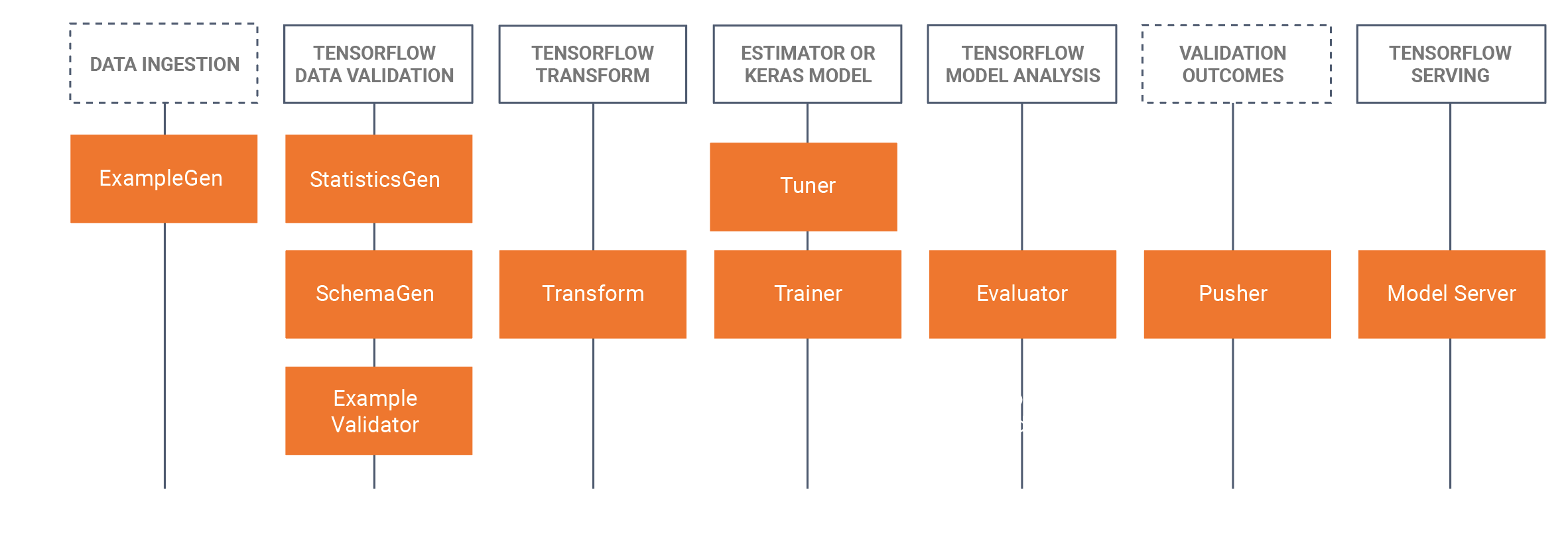

In this course only the following steps are explained:

|

||||

|

||||

- Data Transformation

|

||||

- Model

|

||||

- Serving

|

||||

|

|

@ -37,6 +39,7 @@ One model per use case (so one dimentional)


|- evaluation generative output||

### What is Grounding?

Grounding is the process of using large language models (LLMs) with information that is use-case specific, relevant, and not available as part of the LLM's trained knowledge. [^2]
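A minimal sketch of the idea, assuming a toy keyword-overlap retriever and made-up documents (`retrieve`, `build_grounded_prompt`, and the sample texts are hypothetical and not part of the course material). It only shows how use-case specific text can be placed in front of the question before the prompt is sent to an LLM:

```python
def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question (toy retriever)."""
    q_words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(q_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_grounded_prompt(question, documents):
    """Prepend the retrieved, use-case specific context to the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
    )


# Hypothetical knowledge the LLM was never trained on.
docs = [
    "The support desk is open from 9:00 to 17:00 on weekdays.",
    "Refunds are processed within 14 days.",
    "The office cafeteria serves lunch at noon.",
]
question = "When is the support desk open?"
prompt = build_grounded_prompt(question, docs)
print(prompt)
```

A real system would likely swap the keyword overlap for an embedding-based search, but the prompt assembly step stays the same.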


@ -45,12 +48,14 @@ Grounding is the process of using large language models (LLMs) with information

### LLMOps topics beyond the scope of this course

- Prompt design and prompt management
- Model evaluation
- Model monitoring
- Testing

### File formats for training and evaluation

- JSONL: JSON Lines (JSONL) is a simple text-based format with each question and answer on a row. It is human readable and an ideal choice for small to medium-sized datasets.
- TFRecord: Binary format that is easier for computers to read, making it ideal for efficient training.
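As an illustration of the JSONL layout described above, here is a small sketch that writes and reads one question/answer pair per line. The field names `input_text` and `output_text` and the file name `train.jsonl` are assumptions for the example; the actual keys depend on the training service being used.

```python
import json
import os
import tempfile

# Hypothetical question/answer pairs; one JSON object per line in the file.
examples = [
    {"input_text": "What is MLOps?",
     "output_text": "A practice that unifies ML development and operations."},
    {"input_text": "What is grounding?",
     "output_text": "Using an LLM with use-case specific information."},
]

path = os.path.join(tempfile.mkdtemp(), "train.jsonl")

# Write: serialize each example to a single line.
with open(path, "w", encoding="utf-8") as f:
    for example in examples:
        f.write(json.dumps(example) + "\n")

# Read: parse each non-empty line independently.
with open(path, encoding="utf-8") as f:
    loaded = [json.loads(line) for line in f if line.strip()]

print(len(loaded), loaded[0]["input_text"])
```

Because every line is a complete JSON document, the file can be inspected with a text editor and processed line by line without loading the whole dataset.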


@ -58,12 +63,13 @@ computers, making it ideal for efficient training.

datasets.

### Versioning artifacts

- Keep track of artifacts
- Versioning of data: record which data each data file was generated from (traceability)
- Reproducibility, maintainability

## MLOps workflows for LLMs

Orchestration: which step runs first, which runs next, and so on
Automation: the flow is automated
Deployment: take the trained model and put it into production

@ -74,29 +80,17 @@ Tools for orchestration and automation: Airflow or Kubeflow [Kube for pipelines]

**Reuse of pipelines is important!**

## Beyond deployment

- Package, deploy, and version.
- Model monitoring: metrics and safety.
- Inference scalability:
  - Load test
  - Controlled rollout, etc.
- Latency: permissible latency.
  - Smaller models
  - Faster processors (GPUs, TPUs)
  - Deploy regionally

[^1]: <https://cloud.google.com/blog/products/ai-machine-learning/key-requirements-for-an-mlops-foundation>
[^2]: <https://techcommunity.microsoft.com/t5/fasttrack-for-azure/grounding-llms/ba-p/3843857>

@ -0,0 +1,71 @@

Neural network architectures

Let's dive into the fascinating world of Neural Network Architectures! If you're a GenAI enthusiast, GenAI engineer, AI engineer, architect, CXO, data scientist, data engineer, or a solution architect, you're in the right place.

Imagine neural networks as the backbone of artificial intelligence. They're the brains behind the magic that powers everything from your voice assistants to autonomous cars. Think of them as the building blocks of AI, just like Lego pieces that can be put together in countless ways to create something amazing.

At the heart of a neural network is the neuron, which mimics the behavior of a biological neuron in your brain. These neurons process and transmit information. In a neural network, we organize these neurons into layers, just like how the neurons in your brain work together in different regions.
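To make the neuron-and-layer picture concrete, here is a small sketch in plain Python. The weights, biases, and the sigmoid activation are arbitrary choices for illustration, not values from any trained model.

```python
import math


def sigmoid(x):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, then an activation."""
    total = sum(i * w for i, w in zip(inputs, weights)) + bias
    return sigmoid(total)


def layer(inputs, weight_rows, biases):
    """A layer is several neurons that all read the same inputs."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]


# Two inputs -> hidden layer of two neurons -> one output neuron.
hidden = layer([1.0, 0.5], [[0.2, -0.4], [0.7, 0.1]], [0.0, -0.3])
output = layer(hidden, [[1.0, -1.0]], [0.1])
print(hidden, output)
```

Stacking calls to `layer` like this, with data flowing only forward, is the basic shape of the feedforward networks described below.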

Let's break down some of the most popular neural network architectures:

1. Feedforward Neural Networks (FFNN):

Imagine FFNNs as a straight highway with multiple lanes. Data goes in one direction, from input to output, without any loops or detours.

They are excellent for tasks like image classification. Think about teaching a computer to tell cats from dogs in pictures.

2. Convolutional Neural Networks (CNN):

CNNs are like detectives searching for patterns in images. They're amazing at image-related tasks.

Imagine you have a jigsaw puzzle, and CNNs help you piece together the big picture by examining each small part.

3. Recurrent Neural Networks (RNN):

RNNs are all about sequences. They remember what came before and use that knowledge to make predictions.

Think of RNNs as a storyteller who remembers the entire plot to tell you what happens next in a book or movie.

4. Long Short-Term Memory Networks (LSTM):

LSTMs are a special type of RNN. They have a fantastic memory and are great for tasks like language translation.

Picture LSTMs as your brain's ability to remember not just what happened last, but also what happened a while ago.

5. Gated Recurrent Unit (GRU):

GRUs are like simplified LSTMs. They're great for many of the same tasks but use fewer computational resources.

Imagine GRUs as a quicker thinker who still does an excellent job at predicting sequences.

6. Transformer Neural Networks:

Transformers are the cool kids on the block, known for their excellence in natural language processing tasks.

Think of them as the wizards of language, transforming text from one language to another seamlessly.

7. Autoencoders:

Autoencoders are like secret agents who learn to represent data efficiently. They're used in tasks like data compression and anomaly detection.

Picture autoencoders as experts in storing information compactly.

8. Generative Adversarial Networks (GANs):

GANs are a bit like artists. They create new things by pitting two networks against each other: one trying to create something, and the other trying to spot the fake.

Think of GANs as a forger and an art critic working together to produce incredible artwork.

9. Self-Attention Mechanisms:

Self-attention is like giving more weight to important words in a sentence. It's the key ingredient in Transformers.

Imagine you're reading a book, and your attention naturally focuses more on crucial words.
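The "more weight to important words" idea can be sketched as scaled dot-product self-attention in plain Python. This is a simplified illustration: it assumes the queries, keys, and values are the input vectors themselves (a real Transformer applies learned projections first), and the token vectors are made up.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values = inputs."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        # Each output is a weighted average of all token vectors.
        out = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy "word" vectors; the first two are similar, the third is different.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token; similar vectors receive larger weights.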

Each of these architectures serves a unique purpose, and sometimes they even work together like a team of superheroes with different powers. For example, you can use a CNN to identify objects in an image and then use an RNN to understand the context of those objects in a sequence.

So, why does this matter to you? Whether you're an enthusiast eager to understand the magic behind AI or a seasoned engineer crafting the next generation of intelligent systems, knowing these neural network architectures is crucial. It's like having a toolkit filled with different tools for different jobs.

You'll also appreciate that choosing the right architecture can significantly impact your business's AI strategy. For data scientists and engineers, it's about picking the right tool to solve a particular problem efficiently. And for solution architects, it's about designing systems that leverage these architectures effectively.

In the world of AI, neural network architectures are your Swiss Army knife, ready to tackle the challenges of tomorrow. So, whether you're building a self-driving car, revolutionizing healthcare, or just exploring the limitless possibilities of AI, these architectures are your trusty companions on the journey into the future.

@ -1,24 +1,32 @@

from datetime import datetime

# https://www.pg4e.com/code/datecompat.py


# Non-dateutil version - we try our best
def parsemaildate(md):
    pieces = md.split()
    notz = " ".join(pieces[:4]).strip()

    # Try a bunch of format variations - strptime() is *lame*
    dnotz = None
    for form in [
        "%d %b %Y %H:%M:%S",
        "%d %b %Y %H:%M:%S",
        "%d %b %Y %H:%M",
        "%d %b %Y %H:%M",
        "%d %b %y %H:%M:%S",
        "%d %b %y %H:%M:%S",
        "%d %b %y %H:%M",
        "%d %b %y %H:%M",
    ]:
        try:
            dnotz = datetime.strptime(notz, form)
            break
        except ValueError:  # strptime() raises ValueError on a format mismatch
            continue

    if dnotz is None:
        # print 'Bad Date:',md
        return None

@ -27,13 +35,13 @@ def parsemaildate(md) :

    tz = "+0000"
    try:
        tz = pieces[4]
        ival = int(tz)  # Only want numeric timezone values
        if tz == "-0000":
            tz = "+0000"
        tzh = tz[:3]
        tzm = tz[3:]
        tz = tzh + ":" + tzm
    except (IndexError, ValueError):  # no timezone field, or not numeric
        pass

    return iso + tz
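A self-contained sketch of the same fallback approach, for experimenting outside the diff. The function name `mail_date_to_iso` is hypothetical, and two things are assumptions: that the `iso` value hidden between the hunks is the `isoformat()` of the parsed date, and that falling back to `+00:00` for a missing or non-numeric timezone is acceptable (the script above handles those cases slightly differently).

```python
from datetime import datetime

# Formats tried in order: 4-digit year first, with and without seconds.
FORMATS = [
    "%d %b %Y %H:%M:%S",
    "%d %b %Y %H:%M",
    "%d %b %y %H:%M:%S",
    "%d %b %y %H:%M",
]


def mail_date_to_iso(md):
    """Convert e.g. '5 Jan 2008 09:14:16 -0500' to an ISO 8601 string, or None."""
    pieces = md.split()
    notz = " ".join(pieces[:4])

    parsed = None
    for form in FORMATS:
        try:
            parsed = datetime.strptime(notz, form)
            break
        except ValueError:
            continue
    if parsed is None:
        return None

    tz = "+00:00"  # assumed default when no usable offset is present
    if len(pieces) > 4:
        candidate = pieces[4]
        try:
            int(candidate)  # only numeric offsets such as -0500 are accepted
            if candidate == "-0000":
                candidate = "+0000"
            tz = candidate[:3] + ":" + candidate[3:]
        except ValueError:
            pass  # named zones such as "GMT" fall back to the default

    return parsed.isoformat() + tz


print(mail_date_to_iso("5 Jan 2008 09:14:16 -0500"))
print(mail_date_to_iso("5 Jan 08 09:14 GMT"))
print(mail_date_to_iso("not a date"))
```

Month-name parsing with `%b` depends on the process locale, so the sketch assumes English month abbreviations.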


@ -1,4 +1,3 @@

# https://www.pg4e.com/code/gmane.py
# https://www.pg4e.com/code/datecompat.py
# https://www.pg4e.com/code/myutils.py

@ -18,9 +17,10 @@ import hidden

import myutils
import datecompat

import dateutil.parser as parser  # If this import fails - just comment it out


def parsemaildate(md):
    try:
        pdate = parser.parse(md)  # parse the argument, not the global tdate
        test_at = pdate.isoformat()

@ -28,125 +28,146 @@ def parsemaildate(md) :

    except Exception:
        return datecompat.parsemaildate(md)


# secrets = hidden.secrets()
secrets = hidden.local()

conn = psycopg2.connect(
    host=secrets["host"],
    port=secrets["port"],
    connect_timeout=5,
    database=secrets["database"],
    user=secrets["user"],
    password=secrets["pass"],
)
cur = conn.cursor()

baseurl = "http://mbox.dr-chuck.net/sakai.devel/"

cur.execute(
    """CREATE TABLE IF NOT EXISTS messages
    (id SERIAL, email TEXT, sent_at TIMESTAMPTZ,
     subject TEXT, headers TEXT, body TEXT)"""
)

# Pick up where we left off
sql = "SELECT max(id) FROM messages"
start = myutils.queryValue(cur, sql)
if start is None:
    start = 0

many = 0
count = 0
fail = 0
while True:
    if many < 1:
        conn.commit()
        sval = input("How many messages:")
        if len(sval) < 1:
            break
        many = int(sval)

    start = start + 1

    # Skip rows that are already retrieved
    sql = "SELECT id FROM messages WHERE id=%s"
    row = myutils.queryValue(cur, sql, (start,))
    if row is not None:
        continue  # Skip rows that already exist

    many = many - 1
    url = baseurl + str(start) + "/" + str(start + 1)

    text = "None"
    try:
        # Open with a timeout of 30 seconds
        response = requests.get(url, timeout=30)
        text = response.text
        status = response.status_code
        if status != 200:
            print("Error code=", status, url)
            break
    except KeyboardInterrupt:
        print("")
        print("Program interrupted by user...")
        break
    except Exception as e:
        print("Unable to retrieve or parse page", url)
        print("Error", e)
        fail = fail + 1
        if fail > 5:
            break
        continue

    print(url, len(text))
    count = count + 1

    if not text.startswith("From "):
        print(text)
        print("Did not find From ")
        fail = fail + 1
        if fail > 5:
            break
        continue

    pos = text.find("\n\n")
    if pos > 0:
        hdr = text[:pos]
        body = text[pos + 2 :]
    else:
        print(text)
        print("Could not find break between headers and body")
        fail = fail + 1
        if fail > 5:
            break
        continue

    # Accept with or without < >
    # Raw strings keep \S from being read as an invalid string escape
    email = None
    x = re.findall(r"\nFrom: .* <(\S+@\S+)>\n", hdr)
    if len(x) == 1:
        email = x[0]
        email = email.strip().lower()
        email = email.replace("<", "")
    else:
        x = re.findall(r"\nFrom: (\S+@\S+)\n", hdr)
        if len(x) == 1:
            email = x[0]
            email = email.strip().lower()
            email = email.replace("<", "")

    sent_at = None
    y = re.findall(r"\nDate: .*, (.*)\n", hdr)
    if len(y) == 1:
        tdate = y[0]
        tdate = tdate[:26]
        try:
            sent_at = parsemaildate(tdate)
        except Exception:
            print(text)
            print("Parse fail", tdate)
            fail = fail + 1
            if fail > 5:
                break
            continue

    subject = None
    z = re.findall(r"\nSubject: (.*)\n", hdr)
    if len(z) == 1:
        subject = z[0].strip().lower()

    # Reset the fail counter
    fail = 0
    print(" ", email, sent_at, subject)
    cur.execute(
        """INSERT INTO Messages (id, email, sent_at, subject, headers, body)
        VALUES ( %s, %s, %s, %s, %s, %s ) ON CONFLICT DO NOTHING""",
        (start, email, sent_at, subject, hdr, body),
    )
    if count % 50 == 0:
        conn.commit()
    if count % 100 == 0:
        time.sleep(1)

conn.commit()
cur.close()
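The header/body split and the regular expressions used in the loop can be tried without a database or network connection. The sketch below wraps them in a hypothetical helper, `parse_message`, and runs it on a made-up message; the address and subject are invented for the example.

```python
import re


def parse_message(text):
    """Split a raw mbox message and pull out sender and subject (or None)."""
    pos = text.find("\n\n")  # a blank line separates headers from body
    if pos <= 0:
        return None
    hdr = text[:pos]
    body = text[pos + 2:]

    # Accept the address with or without surrounding < >
    email = None
    x = re.findall(r"\nFrom: .* <(\S+@\S+)>\n", hdr)
    if len(x) != 1:
        x = re.findall(r"\nFrom: (\S+@\S+)\n", hdr)
    if len(x) == 1:
        email = x[0].strip().lower()

    subject = None
    z = re.findall(r"\nSubject: (.*)\n", hdr)
    if len(z) == 1:
        subject = z[0].strip().lower()

    return {"email": email, "subject": subject, "body": body}


# Made-up sample in the same shape as the messages the script downloads.
sample = (
    "From stephen@example.org Sat Jan  5 09:14:16 2008\n"
    "From: Stephen M <Stephen@Example.org>\n"
    "Subject: Re: Build Failure\n"
    "Date: Sat, 5 Jan 2008 09:14:16 -0500\n"
    "\n"
    "Body text here.\n"
)
result = parse_message(sample)
print(result)
```

Note that each pattern requires a newline after the header value, so a header that happens to be the last line before the blank separator will not match; the script above has the same property.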


@ -160,4 +181,4 @@ cur.close()

# update messages set sender = substring(headers, '\nFrom: [^\n]*<([^>]*)');

# explain analyze select id, body from messages where to_tsquery('english','today') @@ to_tsvector('english',body) limit 3;

@ -15,3 +15,5 @@ data = '''

info = json.loads(data)
print('Name:', info["name"])
print('Hide:', info["email"]["hide"])
