3.7 KiB

Reinforcement learning and LLM-powered applications

RLHF helps to align the model with human values. For example, LLMs might have a challenge in that it's creating sometimes harmful content or like a toxic tone or voice. By aligning the model with human feedback and using reinforcement learning as an algorithm. You can help to align the model to reduce that and to align towards, less harmful content and much more helpful content as well.

Reinforcement learning from human feedback (RLHF)

RLHF helps to align the model with human values. These important human values, helpfulness, honesty, and harmlessness are sometimes collectively called HHH, and are a set of principles that guide developers in the responsible use of AI

One potentially exciting application of RLHF is the personalizations of LLMs, where models learn the preferences of each individual user through a continuous feedback process. This could lead to exciting new technologies like individualized learning plans or personalized AI assistants.

how RLHF works?

Reinforcement learning is a type of machine learning in which an agent learns to make decisions related to a specific goal by taking actions in an environment, with the objective of maximizing some notion of a cumulative reward.

The text is, for example, helpful, accurate, and non-toxic. The environment is the context window of the model The space in which text can be entered via a prompt At any given moment, the action that the model will take, meaning which token it will choose next, depends on the prompt text in the context and the probability distribution over the vocabulary space. The reward is assigned based on how closely the completions align with human preferences. Reward model, to classify the outputs of the LLM and evaluate the degree of alignment with human preferences. It plays a central role in how the model updates its weights over many iterations. The sequence of actions and states is called a rollout.

RLHF: Obtaining feedback from humans

The clarity of your instructions can make a big difference on the quality of the human feedback you obtain.

Learning to summarize from human feedback

Constitutional AI is a method for training models using a set of rules and principles that govern the model's behavior.

Constitutional AI: Harmlessness from AI Feedback paper

LLM Powered applications

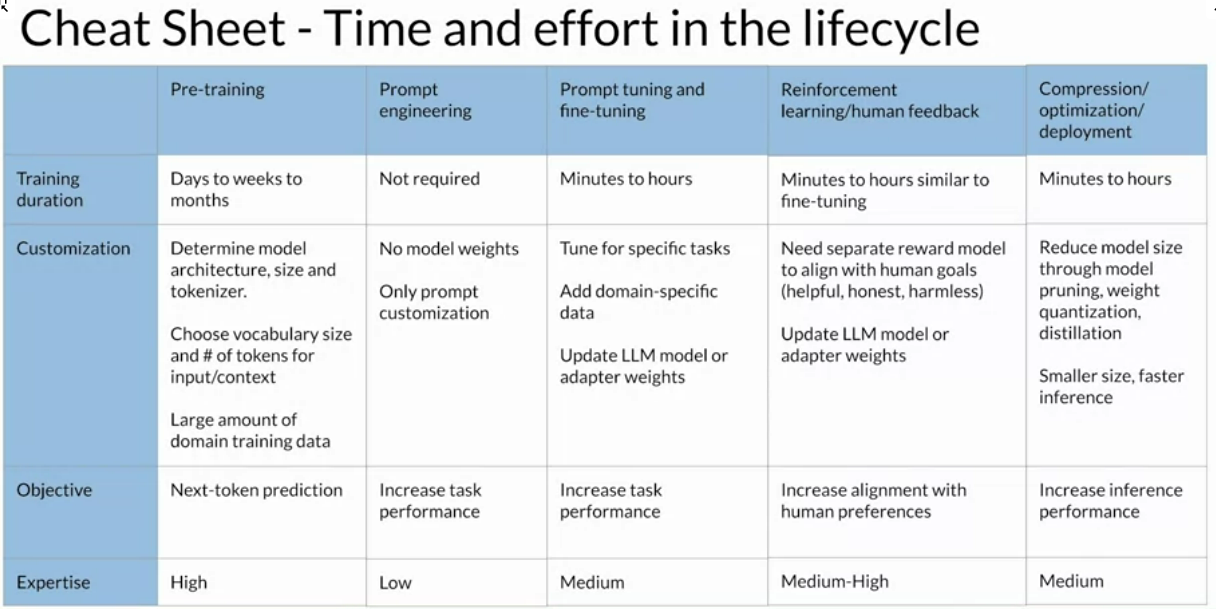

Introduction Model optimizations for deployment

Increase performance -> reduce LLM size, which reduces inference latency The challenge is to reduce the size of the model while still maintaining model performance.

Video has a lot of information

Langchain is an example of Orchestration Library

Retrieval Augmented Generation (RAG) is a great way to overcome the knowledge cutoff (because the world has changes since the model was trained with data current to that date) issue and help the model update its understanding of the world.

The external data store could be a vector store,a SQL database, CSV files, Wikis or other data storage format.