| title | updated | created | latitude | longitude | altitude |
|---|---|---|---|---|---|
| WK 2 Data warehouse | 2021-09-20 11:29:16Z | 2021-09-20 09:08:49Z | 52.09370000 | 6.72510000 | 0.0000 |

BigQuery

BigQuery organizes data tables into units called datasets.
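
In BigQuery SQL a table is referenced by its full path, `project.dataset.table`, which mirrors the project → dataset → table hierarchy. The helper below is a hypothetical sketch (`TableRef` and `parse_table_id` are illustrative names, not part of any Google library) that splits and rebuilds such an ID; it assumes dataset and table names contain no dots.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableRef:
    """Hypothetical helper: a table belongs to a dataset, which belongs to a project."""

    project: str
    dataset: str
    table: str

    def table_id(self) -> str:
        # Fully qualified form used in BigQuery SQL: project.dataset.table
        return f"{self.project}.{self.dataset}.{self.table}"


def parse_table_id(table_id: str) -> TableRef:
    """Split 'project.dataset.table' into its three parts.

    rsplit with maxsplit=2 keeps any dots in the project part together
    (e.g. a domain-scoped project such as 'example.com:proj'), assuming
    dataset and table names themselves contain no dots.
    """
    parts = table_id.rsplit(".", 2)
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected project.dataset.table, got {table_id!r}")
    return TableRef(*parts)


ref = parse_table_id("my-project.sales.orders")
print(ref.dataset)     # sales
print(ref.table_id())  # my-project.sales.orders
```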

Datasets live inside a project, and the project is what billing is associated with.

To run a query, you need to be logged into the GCP console. You run the query in your own GCP project, and the query charges are then billed to that project.

In order to run a query in a project, you need Cloud IAM permissions to submit a job.
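
The billing and permission rules above can be modeled in a few lines. This is a toy, in-memory sketch, not the BigQuery API: only the permission name `bigquery.jobs.create` is real, while `QueryJob`, `submit_query`, and the user/project names are hypothetical. It shows that the project you submit the job in must grant you job-creation rights and is the one billed, even when the table lives in another project (here the public `bigquery-public-data` project). Read access to the table itself is a separate, dataset-level check that this sketch leaves out.

```python
from dataclasses import dataclass

# Real IAM permission name; everything else here is a hypothetical model.
JOB_CREATE = "bigquery.jobs.create"


@dataclass(frozen=True)
class QueryJob:
    job_project: str   # project the job runs in; the query charges go here
    data_project: str  # project that owns the table being queried


def submit_query(user: str, job_project: str, data_project: str,
                 grants: dict[tuple[str, str], set[str]]) -> QueryJob:
    """Model of submitting a query job; grants maps (user, project) -> permissions."""
    if JOB_CREATE not in grants.get((user, job_project), set()):
        raise PermissionError(f"{user} lacks {JOB_CREATE} on {job_project}")
    return QueryJob(job_project=job_project, data_project=data_project)


grants = {("alice", "alice-sandbox"): {JOB_CREATE}}
job = submit_query("alice", "alice-sandbox", "bigquery-public-data", grants)
print(job.job_project)  # alice-sandbox (billed, although the data lives elsewhere)
```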

Access control is through Cloud IAM and is set at the dataset level, so it applies to all tables in the dataset. BigQuery provides predefined roles for controlling access to resources. You can also define authorized views and row-level permissions to give different users different levels of access to the same data.
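
To make the three layers concrete, here is a hypothetical in-memory model (all dataset, view, and user names are invented, and none of this is a real API). A dataset grant covers every table in the dataset; an authorized view in a shared dataset may read a restricted source dataset on behalf of the view's readers; and row-level policies filter which rows each user sees, assuming that once a table has row policies, a user only sees rows matched by a policy granted to them.

```python
# Hypothetical model of BigQuery-style access layers; not a real API.
dataset_readers = {
    "raw": {"data-eng"},                   # source tables, restricted
    "reporting": {"data-eng", "analyst"},  # dataset holding shared views
}

# Authorized view: 'reporting.eu_orders' may read the 'raw' dataset on behalf
# of anyone who can read the view itself.
authorized_views = {"raw": {"reporting.eu_orders"}}

# Row-level policies on one table: (grantees, row filter). Once a table has
# policies, a user sees only rows matched by a policy granted to them.
row_policies = [
    ({"analyst"}, lambda row: row["region"] == "EU"),
    ({"data-eng"}, lambda row: True),
]


def can_read(user: str, dataset: str) -> bool:
    # A dataset-level grant covers every table in that dataset.
    return user in dataset_readers.get(dataset, set())


def can_query_view(user: str, view_id: str, source_dataset: str) -> bool:
    view_dataset = view_id.split(".")[0]
    return (can_read(user, view_dataset)
            and view_id in authorized_views.get(source_dataset, set()))


def visible_rows(user: str, rows: list[dict]) -> list[dict]:
    filters = [f for grantees, f in row_policies if user in grantees]
    return [r for r in rows if any(f(r) for f in filters)]


rows = [{"region": "EU", "total": 10}, {"region": "US", "total": 7}]
print(can_read("analyst", "raw"))                               # False
print(can_query_view("analyst", "reporting.eu_orders", "raw"))  # True
print(visible_rows("analyst", rows))  # [{'region': 'EU', 'total': 10}]
```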

BigQuery datasets can be regional or multi-regional.
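
A small sketch of why a dataset's location matters. It assumes `US` and `EU` are the multi-region locations and everything else (e.g. `europe-west4`) is a single region, and it assumes the usual rule that a query job runs in one location, so all datasets it references must share that location. The function names and dataset names are hypothetical.

```python
# Multi-region locations (other locations are single regions,
# e.g. "europe-west4" or "us-central1").
MULTI_REGIONS = {"US", "EU"}


def location_kind(location: str) -> str:
    return "multi-region" if location in MULTI_REGIONS else "region"


def query_location(dataset_locations: dict[str, str]) -> str:
    """Return the single location all referenced datasets share.

    Assumes one query job runs in one location, so every dataset it
    references must be in that same location.
    """
    locations = set(dataset_locations.values())
    if len(locations) != 1:
        raise ValueError(f"expected exactly one location, got {sorted(locations)}")
    return locations.pop()


print(location_kind("EU"))            # multi-region
print(location_kind("europe-west4"))  # region
print(query_location({"sales": "EU", "crm": "EU"}))  # EU
```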

Logs in BigQuery are immutable and can be exported to Stackdriver (now Cloud Logging).

Loading data into BigQuery

EL, ELT, ETL

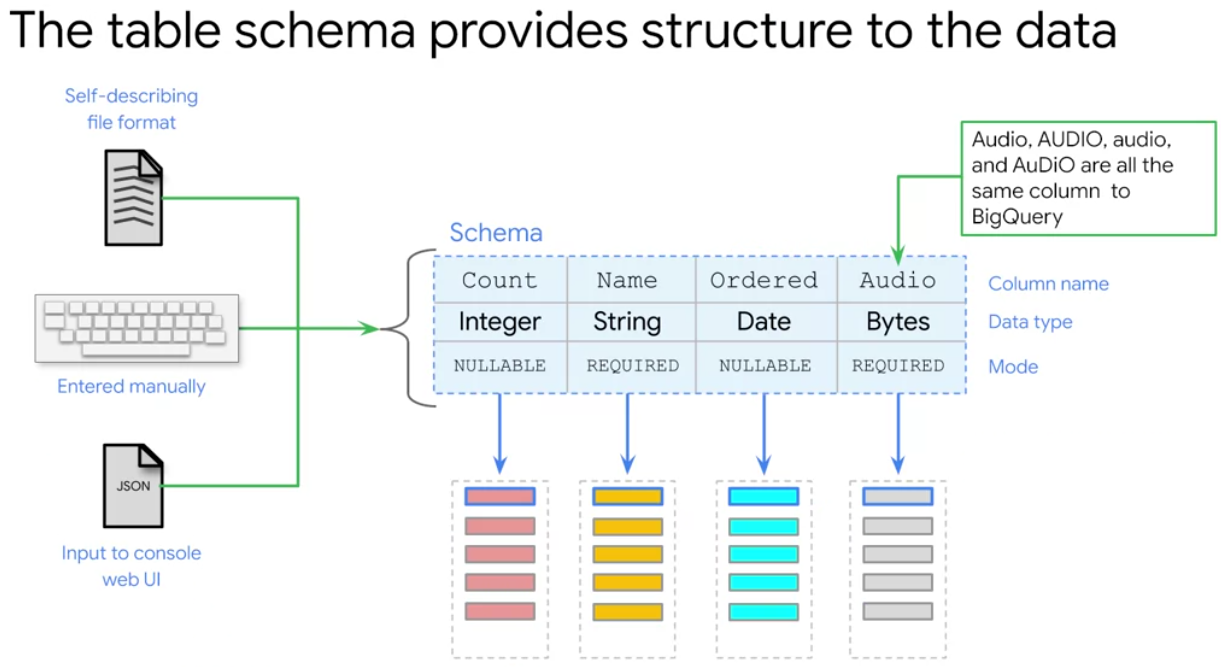
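
The three patterns differ in where, and whether, the transform step happens. A minimal sketch, using a plain Python dict as a stand-in for the warehouse and hypothetical `extract`/`clean` helpers; in BigQuery the ELT transform would typically be a SQL query run over the already-loaded raw table.

```python
def extract() -> list[dict]:
    # Hypothetical source rows, as they might arrive from a CSV export
    return [{"name": " alice ", "amount": "10"}, {"name": "bob", "amount": "5"}]


def clean(rows: list[dict]) -> list[dict]:
    # The "T": trim/capitalize names and cast amounts to integers
    return [{"name": r["name"].strip().title(), "amount": int(r["amount"])}
            for r in rows]


def el(warehouse: dict) -> None:
    warehouse["raw_sales"] = extract()  # load as-is, no transform step


def elt(warehouse: dict) -> None:
    warehouse["raw_sales"] = extract()                  # load raw first,
    warehouse["sales"] = clean(warehouse["raw_sales"])  # then transform inside


def etl(warehouse: dict) -> None:
    warehouse["sales"] = clean(extract())  # transform in the pipeline; raw never lands


for pipeline in (el, elt, etl):
    wh: dict = {}
    pipeline(wh)
    print(pipeline.__name__, sorted(wh))
# el ['raw_sales']
# elt ['raw_sales', 'sales']
# etl ['sales']
```

Keeping the raw table (EL, ELT) means the transform can be rerun or changed later without extracting again; ETL lands only the cleaned result.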

If your data is in Avro format, which is self-describing, BigQuery can determine the schema directly. If the data is in JSON or CSV format, BigQuery can auto-detect the schema, but manual verification is recommended.
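
Why verify an auto-detected schema? A simplified, hypothetical inference routine (this is not BigQuery's actual detection algorithm) guesses each CSV column's type from its values, trying BOOLEAN, then INTEGER, then FLOAT, and falling back to STRING. Avro needs no such guessing because the schema travels with the data.

```python
import csv
import io


def infer_type(values: list[str]) -> str:
    """Guess a BigQuery column type from non-empty string values (simplified)."""
    if not values:
        return "STRING"  # nothing to go on; fall back to the most general type
    if all(v.strip().lower() in ("true", "false") for v in values):
        return "BOOLEAN"
    for bq_type, cast in (("INTEGER", int), ("FLOAT", float)):
        try:
            for v in values:
                cast(v)
        except ValueError:
            continue  # at least one value doesn't fit; try the next type
        return bq_type
    return "STRING"


def infer_schema(csv_text: str) -> list[dict[str, str]]:
    reader = csv.DictReader(io.StringIO(csv_text))
    rows = list(reader)
    schema = []
    for name in reader.fieldnames or []:
        # Empty cells are treated as NULL and ignored for type guessing
        values = [row[name] for row in rows if row[name] not in ("", None)]
        schema.append({"name": name, "type": infer_type(values)})
    return schema


sample = "id,price,active,city\n1,9.99,true,Amsterdam\n2,12,false,Berlin\n"
for field in infer_schema(sample):
    print(field)
# {'name': 'id', 'type': 'INTEGER'}
# {'name': 'price', 'type': 'FLOAT'}
# {'name': 'active', 'type': 'BOOLEAN'}
# {'name': 'city', 'type': 'STRING'}
```

A column of postal codes such as `01234` would come out as INTEGER in this sketch, silently dropping the leading zero, which is exactly the kind of guess worth checking by hand.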

Backfilling data means adding missing past data to make a dataset complete, with no gaps, so that all analytic processes keep working as expected.
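
Assuming a table that receives one load per day, a backfill comes down to finding the days with no data and loading just those. In this sketch `load_day` is a hypothetical callback standing in for whatever job re-fetches and loads one day of source data; the dates are illustrative.

```python
from datetime import date, timedelta
from typing import Callable


def missing_dates(loaded: set[date], start: date, end: date) -> list[date]:
    """Every day in [start, end] (inclusive) with no loaded data."""
    gaps = []
    day = start
    while day <= end:
        if day not in loaded:
            gaps.append(day)
        day += timedelta(days=1)
    return gaps


def backfill(loaded: set[date], start: date, end: date,
             load_day: Callable[[date], None]) -> list[date]:
    """Load each missing day via load_day (a hypothetical loader) and record it."""
    gaps = missing_dates(loaded, start, end)
    for day in gaps:
        load_day(day)
        loaded.add(day)
    return gaps


loaded = {date(2021, 9, 1), date(2021, 9, 2), date(2021, 9, 5)}
filled = backfill(loaded, date(2021, 9, 1), date(2021, 9, 5),
                  load_day=lambda d: print("loading", d.isoformat()))
# loading 2021-09-03
# loading 2021-09-04
print(missing_dates(loaded, date(2021, 9, 1), date(2021, 9, 5)))  # []
```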